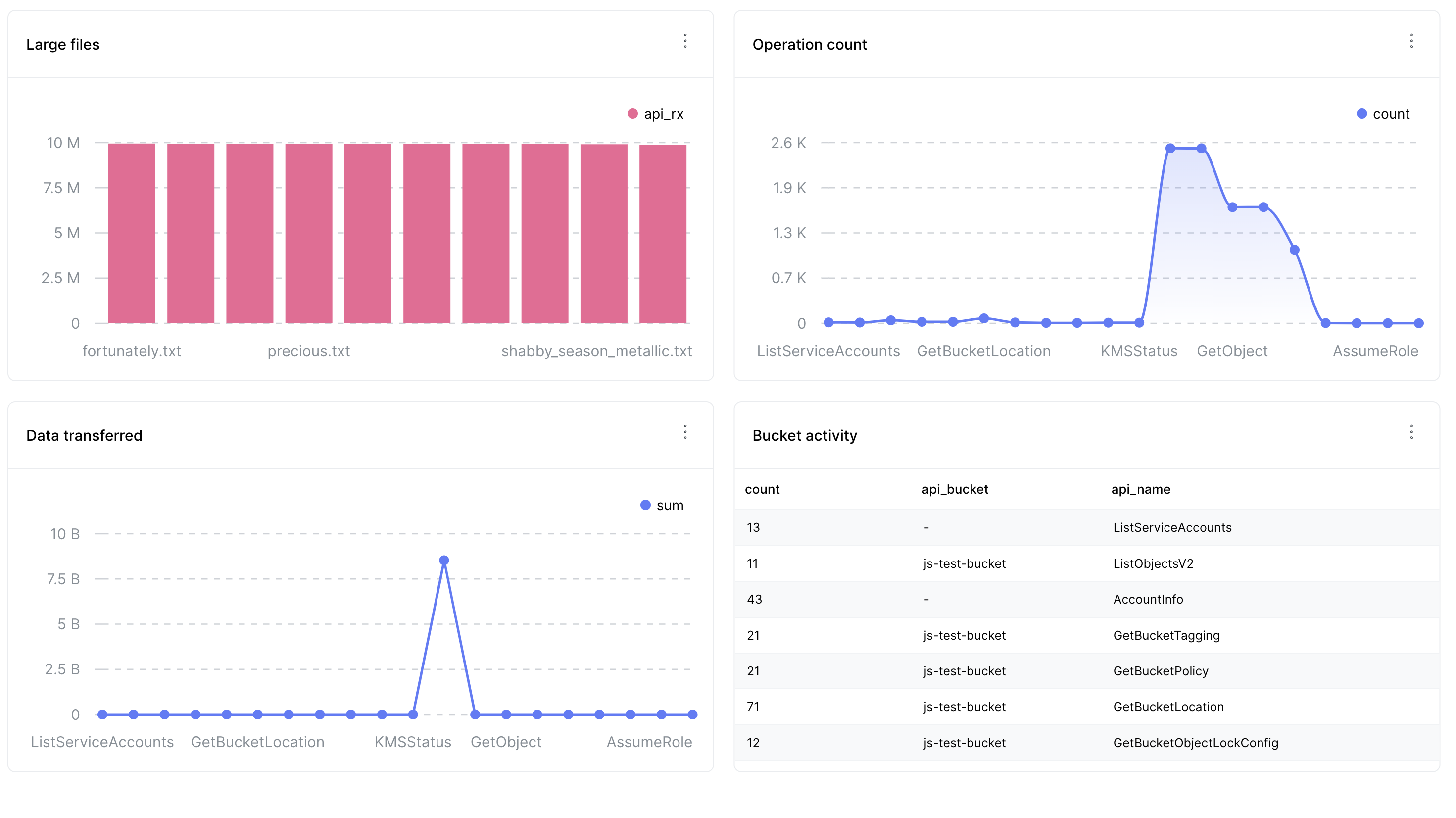

Parseable as a logging target for MinIO Audit & Debug logs

As architecture patterns such as the separation of compute and storage mature, object storage is increasingly the first choice for applications that store primary (non-archival) data. This is in addition to its traditional use cases of archival and large blob storage.

MinIO is an open-source, AWS S3-compatible object storage platform. It lets developers work with one of the most common object storage interfaces while avoiding vendor lock-in and keeping flexibility.