Distributed traces are a telemetry signal made up of spans. Each span is essentially an event (an operation) with fields like service name, operation (span name), timestamps, duration, and key/value attributes. In a large system, traces (trees of spans) can add up to billions of spans. Efficient on-disk formats are critical for modern systems that generate terabytes of traces per day.

An observability platform must retain high-granularity trace data while allowing fast, flexible queries. Columnar formats like Apache Parquet shine in this context: they store each field (column) separately, making scans and filtering much faster than row-by-row storage. In Parquet, a query that filters on a span attribute (e.g. service name) can scan only the service column, skipping all other fields entirely. This reduces I/O and accelerates span queries.

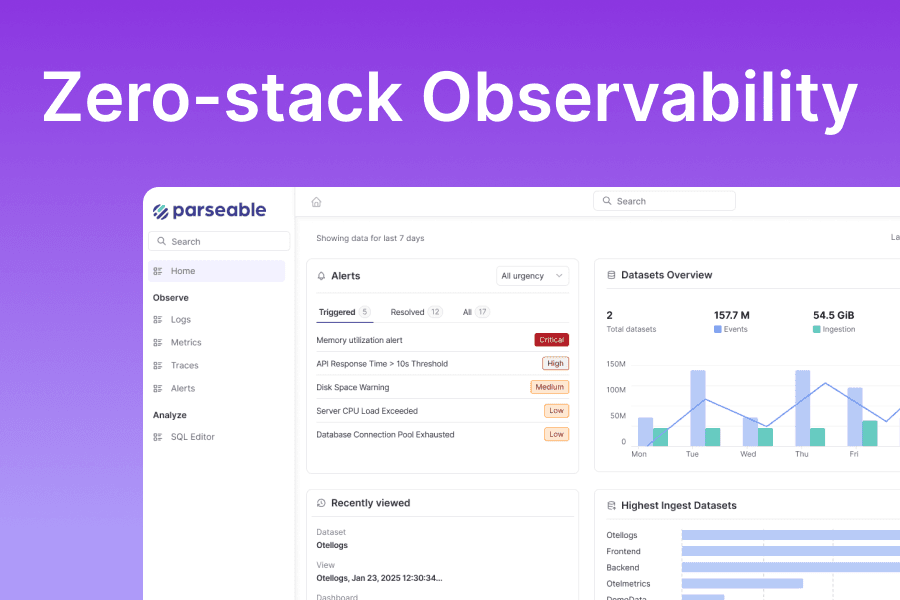

Parquet provides the core framework for efficient, compressed storage. Parseable combines it with efficient metadata management, smart caching, and a Rust-based, cloud-native design to deliver a high-performance observability system.

In this post, we share what we learned from ingesting OpenTelemetry (OTel) traces into Parseable and examining the raw Parquet files and how they behave.

Deep-dive into Traces

At a fundamental level, a trace represents a tree of spans, capturing the full journey of a request as it moves through different components of a distributed system.

- Each trace starts with a root span, representing the initial entry point, for example, a user request hitting an API Gateway.

- Every subsequent span records an operation triggered while processing that request: database queries, service-to-service calls, cache lookups, and more.

Spans follow a simple relationship model: each span holds a parent_span_id pointing to the span that triggered it, forming a hierarchical tree.

In pure graph theory terms, a trace is a directed acyclic graph (DAG), but operationally it behaves like a tree:

- Single Root: There is exactly one starting point: the root span, which has no parent.

- Directed Edges: Child spans always point back to their immediate parents.

- Acyclic Nature: No loops or cycles are allowed; a span cannot be its own ancestor.

| trace_id | span_id | parent_span_id | service_name | operation_name |
|---|---|---|---|---|
| abc123 | 1 | NULL | API Gateway | GET /login |
| abc123 | 2 | 1 | Auth Service | Validate Token |
| abc123 | 3 | 1 | User Service | Fetch Profile |
| abc123 | 4 | 3 | DB Service | SQL Query |

This simple table represents a trace tree where:

- Span 1 is the root.

- Spans 2 and 3 are children of span 1.

- Span 4 is a child of span 3.
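The following minimal Python sketch checks the three tree properties against the example trace from the table. It is our illustration, not Parseable's ingestion code. The function name `is_valid_trace_tree` and the `(span_id, parent_span_id)` input shape are assumptions made for the example.

```python
from typing import Optional

# Each span is reduced to (span_id, parent_span_id); the root's parent is None.
Span = tuple[str, Optional[str]]


def is_valid_trace_tree(spans: list[Span]) -> bool:
    """Check the three tree properties: single root, valid edges, no cycles."""
    parent_of = {span_id: parent for span_id, parent in spans}
    if len(parent_of) != len(spans):
        return False  # duplicate span IDs

    # Single root: exactly one span has no parent.
    roots = [span_id for span_id, parent in parent_of.items() if parent is None]
    if len(roots) != 1:
        return False

    # Directed edges: every parent_span_id must refer to a span in this trace.
    if any(p is not None and p not in parent_of for p in parent_of.values()):
        return False

    # Acyclic: following parent links from any span must end at the root
    # without visiting the same span twice.
    for start in parent_of:
        seen: set[str] = set()
        node: Optional[str] = start
        while node is not None:
            if node in seen:
                return False
            seen.add(node)
            node = parent_of[node]
    return True


# The trace from the table: span 1 is the root, 2 and 3 are its children, 4 is under 3.
trace = [("1", None), ("2", "1"), ("3", "1"), ("4", "3")]
print(is_valid_trace_tree(trace))  # True
```

The cycle check re-walks parent links from every span, which is quadratic in the worst case. That keeps the sketch short. A production validator would remember which spans are already known to reach the root.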

This tree structure captures both temporal (when spans happen) and causal (why spans happen) relationships.

The depth of a trace (the longest path from root to leaf) often correlates with request complexity:

- A deep trace may signal heavy service chaining.

- A wide trace with many immediate children can indicate parallelized operations.
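Once spans are linked by parent_span_id, depth and width are easy to compute. The sketch below uses a hypothetical helper, `trace_shape`, and assumes a valid single-root trace. It measures depth as the number of spans on the longest root-to-leaf path, and width as the largest number of direct children under any one span.

```python
from collections import defaultdict
from typing import Optional


def trace_shape(spans: list[tuple[str, Optional[str]]]) -> tuple[int, int]:
    """Return (depth, max_fan_out) for (span_id, parent_span_id) pairs.

    Assumes a valid trace with exactly one root span.
    """
    children: dict[str, list[str]] = defaultdict(list)
    root = None
    for span_id, parent in spans:
        if parent is None:
            root = span_id
        else:
            children[parent].append(span_id)
    if root is None:
        raise ValueError("trace has no root span")

    depth, max_fan_out = 0, 0
    stack = [(root, 1)]  # (span_id, number of spans from the root to here)
    while stack:
        span_id, level = stack.pop()
        depth = max(depth, level)
        kids = children.get(span_id, [])
        max_fan_out = max(max_fan_out, len(kids))
        stack.extend((kid, level + 1) for kid in kids)
    return depth, max_fan_out


# Example trace from the table: longest path is 1 -> 3 -> 4; span 1 has two children.
print(trace_shape([("1", None), ("2", "1"), ("3", "1"), ("4", "3")]))  # (3, 2)
```

An explicit stack replaces recursion so that unusually deep traces don't hit Python's recursion limit.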

Storage in ParseableDB

In Parseable, however, we flatten this tree structure.

Each span becomes a row in a table, with columns like trace_id, span_id, and parent_span_id helping reconstruct the tree during query or visualization.
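Flattening is just a pre-order walk that emits one row per span and records each span's parent as a plain ID. The sketch below assumes a hypothetical nested input shape: `span_id`, `service_name`, `operation_name`, and an optional `children` list. Real OTLP payloads are shaped differently, so treat `flatten_trace` as illustrative only.

```python
from typing import Any, Optional


def flatten_trace(trace_id: str, span: dict[str, Any],
                  parent_span_id: Optional[str] = None) -> list[dict[str, Any]]:
    """Flatten a nested span tree into one flat row per span (pre-order).

    The parent-child link survives only as the parent_span_id column.
    """
    rows = [{
        "trace_id": trace_id,
        "span_id": span["span_id"],
        "parent_span_id": parent_span_id,
        "service_name": span["service_name"],
        "operation_name": span["operation_name"],
    }]
    for child in span.get("children", []):
        rows.extend(flatten_trace(trace_id, child, span["span_id"]))
    return rows


# Hypothetical nested form of the abc123 trace from the table.
nested = {
    "span_id": "1", "service_name": "API Gateway", "operation_name": "GET /login",
    "children": [
        {"span_id": "2", "service_name": "Auth Service", "operation_name": "Validate Token"},
        {"span_id": "3", "service_name": "User Service", "operation_name": "Fetch Profile",
         "children": [
             {"span_id": "4", "service_name": "DB Service", "operation_name": "SQL Query"},
         ]},
    ],
}

for row in flatten_trace("abc123", nested):
    print(row["span_id"], row["parent_span_id"], row["service_name"])
```

Running it prints the same four rows as the example table, in the same order.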

Because the parent-child relationship is stored as simple ID links, you don't need a graph database to store traces. You just need a storage format that:

- Preserves the relationships (via IDs).

- Allows fast filtering (e.g., find all spans belonging to trace_id = xyz).

- Supports efficient traversal (rebuilding the tree in memory when needed).
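The filtering and traversal requirements can be sketched together in Python. The code selects one trace's rows, indexes children by parent_span_id, and then walks down from the root. The rows and the helper `rebuild_tree` are hypothetical. In a real deployment the filtering step would be pushed down to storage rather than done with a list comprehension.

```python
from collections import defaultdict
from typing import Any

# Flat rows as they might come back from storage. "def456" is a hypothetical
# second trace that reuses span_id "1", which is why we filter by trace_id first.
rows: list[dict[str, Any]] = [
    {"trace_id": "abc123", "span_id": "1", "parent_span_id": None,
     "service_name": "API Gateway", "operation_name": "GET /login"},
    {"trace_id": "abc123", "span_id": "4", "parent_span_id": "3",
     "service_name": "DB Service", "operation_name": "SQL Query"},
    {"trace_id": "def456", "span_id": "1", "parent_span_id": None,
     "service_name": "Cart Service", "operation_name": "POST /cart"},
    {"trace_id": "abc123", "span_id": "2", "parent_span_id": "1",
     "service_name": "Auth Service", "operation_name": "Validate Token"},
    {"trace_id": "abc123", "span_id": "3", "parent_span_id": "1",
     "service_name": "User Service", "operation_name": "Fetch Profile"},
]


def rebuild_tree(rows: list[dict[str, Any]], trace_id: str) -> list[str]:
    """Filter one trace's spans, relink them by parent_span_id, and render an outline."""
    spans = [r for r in rows if r["trace_id"] == trace_id]  # filtering step

    children: dict[str, list[dict[str, Any]]] = defaultdict(list)
    roots = []
    for span in spans:
        if span["parent_span_id"] is None:
            roots.append(span)
        else:
            children[span["parent_span_id"]].append(span)

    outline: list[str] = []

    def walk(span: dict[str, Any], depth: int) -> None:  # traversal step
        outline.append("  " * depth + f'{span["service_name"]}: {span["operation_name"]}')
        for child in children[span["span_id"]]:
            walk(child, depth + 1)

    for root in roots:
        walk(root, 0)
    return outline


print("\n".join(rebuild_tree(rows, "abc123")))
```

The output is an indented outline with API Gateway at the top, Auth Service and User Service beneath it, and DB Service under User Service. Rows arrive out of order, but that does not matter because the parent links alone determine the structure.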

Why Columnar Storage Helps

Selective Queries (a.k.a. Only Read What You Need)

In Parquet, data is organized column by column, not row by row.

If you need to find all spans from a specific service (say, "auth-service"), you don’t have to read the entire span structure.

You only read the service_name column.

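Thanks to column pruning (reading only the columns a query references) and predicate pushdown (using column statistics to skip chunks that cannot match), Parquet readers can skip irrelevant parts of the file altogether, often cutting disk I/O by 90% or more on selective queries.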

In Parseable, this massively speeds up common queries like "find all error spans" or "filter spans where http.status_code >= 500."
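The effect of column pruning can be modeled with a toy columnar layout. The sketch pivots span records into one list per field, then evaluates a filter against a single list. The data and helper names (`to_columns`, `filter_column`) are hypothetical, and the sketch models only which values get read. It does not model Parquet's actual encodings, pages, or statistics.

```python
from typing import Any, Callable

# A handful of hypothetical spans in row (record) form.
spans: list[dict[str, Any]] = [
    {"trace_id": "t1", "span_id": "1", "service_name": "api-gateway",
     "http.status_code": 200, "duration_ms": 12.5},
    {"trace_id": "t1", "span_id": "2", "service_name": "auth-service",
     "http.status_code": 500, "duration_ms": 48.0},
    {"trace_id": "t2", "span_id": "3", "service_name": "auth-service",
     "http.status_code": 200, "duration_ms": 7.1},
    {"trace_id": "t2", "span_id": "4", "service_name": "db-service",
     "http.status_code": 503, "duration_ms": 230.4},
]


def to_columns(rows: list[dict[str, Any]]) -> dict[str, list[Any]]:
    """Pivot records into one list per field (a toy stand-in for column chunks)."""
    return {name: [row[name] for row in rows] for name in rows[0]}


def filter_column(columns: dict[str, list[Any]], name: str,
                  predicate: Callable[[Any], bool]) -> tuple[list[int], int]:
    """Evaluate a predicate against a single column (column pruning).

    Returns the matching row positions and how many values were read;
    no other column is touched.
    """
    column = columns[name]
    return [i for i, value in enumerate(column) if predicate(value)], len(column)


columns = to_columns(spans)

positions, values_read = filter_column(columns, "service_name", lambda s: s == "auth-service")
print(positions, values_read)  # [1, 2] 4  (a full row scan would read 4 x 5 = 20 values)

errors, _ = filter_column(columns, "http.status_code", lambda code: code >= 500)
print([columns["span_id"][i] for i in errors])  # ['2', '4']
```

With five fields the saving is 4 values read instead of 20. The ratio grows with the number of attributes per span.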

Compression and Redundancy (The Math Behind It)

Trace data is naturally redundant: many spans share common attributes like service name, operation, and status.

In Parseable:

- Well-known attributes (like service.name, span.name, status.code, duration_ms) are mapped into their own dedicated columns at ingestion time.

- Remaining dynamic or custom attributes are grouped under a generic other_attributes column as a flexible key-value store.

- Instead of relying purely on Parquet's internal dictionary encoding, we apply lightweight string matching during ingestion to categorize and organize attributes efficiently.

Attribute Splitting in Parseable

| Field | Before (Raw Span) | After (Parsed in Parseable) |
|---|---|---|
| service.name | "flagd" | service_name = "flagd" |
| telemetry.sdk.language | "go" | sdk_language = "go" |
| All others (e.g., rpc.system, rpc.service, net.peer.name, etc.) | Various dynamic fields | other_attributes → { "rpc.system": "connect_rpc", "rpc.service": "flagd.evaluation.v1.Service", "rpc.method": "ResolveAll", "net.peer.name": "172.18.0.26", "net.peer.port": "47150", ... } |

Explanation:

- Structured columns: Essential fields needed for fast querying (trace_id, span_id, service_name, operation_name, etc.).

- Other attributes: Less predictable or dynamic fields packed neatly inside a single column for flexibility.

This design ensures that common queries (e.g., "all spans where status_code != 200") are fast, while still allowing flexibility for exploring less common metadata.
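The following minimal sketch shows this split, assuming an exact-match lookup table from OTel attribute keys to column names. Only the two mappings in the attribute-splitting table come from the source. `KNOWN_COLUMNS` and `split_attributes` are hypothetical names, and Parseable's real matching rules may differ.

```python
from typing import Any

# Hypothetical lookup: well-known attribute key -> dedicated column name.
# Only these two entries are taken from the attribute-splitting table.
KNOWN_COLUMNS = {
    "service.name": "service_name",
    "telemetry.sdk.language": "sdk_language",
}


def split_attributes(attrs: dict[str, Any]) -> dict[str, Any]:
    """Route known keys to their own columns; pack everything else into other_attributes."""
    row: dict[str, Any] = {}
    other: dict[str, Any] = {}
    for key, value in attrs.items():
        column = KNOWN_COLUMNS.get(key)
        if column is not None:
            row[column] = value
        else:
            other[key] = value
    row["other_attributes"] = other
    return row


attrs = {
    "service.name": "flagd",
    "telemetry.sdk.language": "go",
    "rpc.system": "connect_rpc",
    "rpc.method": "ResolveAll",
    "net.peer.port": "47150",
}
row = split_attributes(attrs)
print(row["service_name"], row["sdk_language"])  # flagd go
print(row["other_attributes"])
```

Because every span gets the same dedicated columns, filters on them can be served by column pruning. The long tail of keys stays queryable inside other_attributes without widening the schema.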

Why does it matter?

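In row-based storage (like JSON or CSV), every repeated value is written out again on every row and bloats the file. In columnar storage, low-entropy columns (few distinct values, many repeats) compress dramatically, because encodings such as dictionary and run-length encoding store each repeated value only once.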
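Dictionary encoding and run-length encoding are the main reasons repeated values shrink so well. The sketch below applies both ideas to a toy service-name column. `dictionary_encode` and `run_length_encode` are hypothetical helpers. Real Parquet stores dictionary indices with a hybrid RLE/bit-packed encoding rather than Python tuples.

```python
def dictionary_encode(values: list[str]) -> tuple[list[str], list[int]]:
    """Store each distinct value once, plus a small integer index per row."""
    dictionary: list[str] = []
    index_of: dict[str, int] = {}
    indices: list[int] = []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        indices.append(index_of[value])
    return dictionary, indices


def run_length_encode(indices: list[int]) -> list[tuple[int, int]]:
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for i in indices:
        if runs and runs[-1][0] == i:
            runs[-1] = (i, runs[-1][1] + 1)
        else:
            runs.append((i, 1))
    return runs


service_column = ["frontend", "frontend", "frontend", "checkout", "checkout", "frontend"]
dictionary, indices = dictionary_encode(service_column)
print(dictionary)                  # ['frontend', 'checkout']
print(indices)                     # [0, 0, 0, 1, 1, 0]
print(run_length_encode(indices))  # [(0, 3), (1, 2), (0, 1)]
```

Six strings become two dictionary entries and three runs. On real span data, where one service emits long runs of spans, the runs get much longer.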

Quick math (illustrative figures; actual results depend on the data and the queries):

- A 1 TB JSON file of spans could compress down to 130 GB in Parquet.

- Data scanned during queries drops by 99%.

- Queries speed up by 34×.

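In fact, instead of facing a combinatorial explosion (the product of every column's distinct values, i.e. all possible label combinations), Parquet's per-column dictionaries only need to store the sum of each column's distinct values.

Example (four columns with 5, 3, 50, and 10M distinct values):

- Row-based: up to 5×3×50×10M = 7.5 billion combinations.

- Columnar: 5+3+50+10M = just ~10 million values.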
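The arithmetic in the example can be checked directly. The column names below are placeholders, since the example gives only the distinct-value counts.

```python
from math import prod

# Placeholder column names; only the distinct-value counts come from the example.
distinct_per_column = {"col_a": 5, "col_b": 3, "col_c": 50, "col_d": 10_000_000}

# Anything keyed on full label combinations grows with the product (worst case).
combinations = prod(distinct_per_column.values())
# Per-column dictionaries only hold each column's own distinct values: the sum.
distinct_values = sum(distinct_per_column.values())

print(f"{combinations:,}")     # 7,500,000,000
print(f"{distinct_values:,}")  # 10,000,058
```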

At Parseable, this is crucial. It lets us handle high cardinality (think: service names, tags, versions) without monstrous indexes or memory footprints.

Better Disk I/O (Read Amplification Solved)

Since Parquet lays out each column contiguously, queries become large sequential reads rather than tiny random reads.

- In JSON, querying a field = reading the entire span each time.

- In Parquet, querying a field = reading just that column.

This directly cuts disk seek time and I/O overhead.

Combine that with Parquet’s built-in min/max statistics, stored for each row group, and queries can skip massive chunks of data entirely.

In short, a trace query in Parseable touches only a small fraction of the data: the columns and row groups it actually needs.
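Min/max skipping is simple to model: record each row group's statistics at write time, then consult them before reading any values. This sketch uses hypothetical durations and helper names (`write_row_groups`, `spans_slower_than`) for a query like "spans with duration > 100ms". It illustrates the technique and is not the code of Parseable or any particular Parquet reader.

```python
from dataclasses import dataclass


@dataclass
class RowGroup:
    """A toy row group: one column's values plus min/max stats recorded at write time."""
    values: list[float]
    min_value: float
    max_value: float


def write_row_groups(values: list[float], group_size: int) -> list[RowGroup]:
    """Split a column into row groups and record min/max statistics for each."""
    groups = []
    for start in range(0, len(values), group_size):
        chunk = values[start:start + group_size]
        groups.append(RowGroup(chunk, min(chunk), max(chunk)))
    return groups


def spans_slower_than(groups: list[RowGroup], threshold_ms: float) -> tuple[list[float], int]:
    """Return durations above the threshold and how many row groups had to be read."""
    hits: list[float] = []
    groups_read = 0
    for group in groups:
        if group.max_value <= threshold_ms:
            continue  # stats prove nothing here can match: skip without reading values
        groups_read += 1
        hits.extend(v for v in group.values if v > threshold_ms)
    return hits, groups_read


# Hypothetical duration_ms column split into three row groups of four spans each.
durations = [5.0, 8.0, 12.0, 3.0, 9.0, 7.0, 150.0, 220.0, 95.0, 11.0, 4.0, 6.0]
groups = write_row_groups(durations, group_size=4)
hits, groups_read = spans_slower_than(groups, threshold_ms=100.0)
print(hits, groups_read)  # [150.0, 220.0] 1
```

Only one of the three row groups is read. A "less than" predicate would consult `min_value` in the same way.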

CPU Loves It Too (Vectorized Processing)

Columnar data is also CPU cache-friendly and SIMD-vectorization-friendly.

When we scan spans in Parseable (say, finding all spans with duration > 100ms), our engine can apply filters to thousands of values at once using CPU vector instructions.

The end result: trace queries are faster not only on disk but also in memory and on the CPU. Because Parquet stores each column's values contiguously within a row group, queries can run CPU-efficient algorithms over whole arrays of values instead of one record at a time.

Real-World Examples

- Parseable: The Parseable observability platform is explicitly built on a columnar-first design. As the Parseable team explains, they adopted Parquet for telemetry storage to enable “highly selective scans and predicate pushdowns”. Parseable’s object-store-first, diskless architecture relies on Parquet so that “the cardinality of one field doesn’t balloon memory usage or index size,” handling high-cardinality trace fields without massive in-memory indexes. When benchmarking Parseable with ClickBench, this approach delivers fast queries with significantly lower storage overhead than row-oriented indexes. In short, Parquet underpins Parseable’s ability to scale traces: the system stores each span field only once per block (often compressed), and queries touch only the fields they need, not entire span records.

- Grafana Tempo: Tempo’s use of Parquet shows that at scale (billions of spans) you can run rich trace queries without a heavy custom index, and the wider Parquet ecosystem (Spark, Presto, Arrow) can even query the trace files directly.

- OpenTelemetry: The OpenTelemetry project is exploring columnar transport formats (e.g., Apache Arrow) and columnar on-disk storage for telemetry. An OpenTelemetry blog post notes that while OTLP traditionally uses protobuf, on the backend “this data is typically stored in a columnar format to optimize compression ratio, data retrieval, and processing efficiency” (opentelemetry.io). In practice, many Jaeger-compatible systems and OTel pipelines write spans to Parquet or similar formats (there are even proposals for the OTel Collector to write Parquet telemetry files). The common theme is that traces, like metrics and logs, benefit from the same columnar speedups: queries by span attributes become much faster and cheaper.

Conclusion

Columnar formats like Parquet match the needs of modern tracing. Spans are just rows of fields, and columnar storage makes it cheap to scan or filter by any field. Parquet’s high compression on repetitive span attributes cuts storage costs, and its ability to skip unused data chunks slashes query latency.

For Parseable, using Parquet in its tracing pipeline lets the system avoid giant indexes and focus CPU/IO only on relevant span fields, yielding very fast ad-hoc trace queries and efficient long-term storage.

In summary, Parquet’s columnar model addresses the mathematical challenges of high-cardinality trace data, reduces I/O overhead, and leverages data redundancy, making it a natural fit for scalable trace storage in observability systems.

What's Next?

If you're hitting the limits of your current observability stack, or just want a faster, more predictable way to explore traces, schedule a call with the Parseable team. We’ll walk you through how we built Parseable for efficiency at massive scale, and how you can too.