What are the Best Logging Practices? | Parseable

If you are a programmer, you have probably come across logging, one of the most widely used practices in software development. Logging can act as your silent guardian, recording the changes and steps your application takes. While there is no standardized rule book to refer to, we have compiled a few rules of thumb to start your journey with. Effective logging isn’t just about collecting data; it involves a well-defined strategy. Consider this blog post your beginner’s guide to logging practices.

What is logging?

Logging refers to the process of recording events and data points within a software application. These entries provide a system of record of what transpired, serving as a crucial resource for debugging, monitoring, and security analysis. Applications typically log various types of information, including:

- Application logs: Track the application's internal workings, recording successes and failures.

- Security logs: Capture security-related events, such as login attempts and access control checks (also called audit logs).

- System logs: Record events within the operating system or underlying infrastructure.

Read our previous blog post on the history of the logging ecosystem here.

Why is logging important?

Effective logging offers a multitude of benefits, including:

- Troubleshooting and debugging: Logs show the path your application took. When logs are well maintained, developers can quickly pinpoint the root cause of an issue.

- Monitoring and observability: By analyzing logs, you gain valuable insights into application performance and system health.

- Security and compliance: Logs also serve as a record of security events, which helps with incident detection and with meeting regulatory requirements.

- Performance tuning: Logs can reveal performance bottlenecks, allowing for targeted optimization efforts.

Best practices for effective logging

While it is difficult to generalize, there are a few best practices that are almost always a good choice to follow:

Log everything relevant

While comprehensive logging is essential, striking a balance is key. Aim to capture critical events like errors, warnings, and informational messages. Avoid excessive logging of trivial details, which can bloat log files and impact performance.

Use a consistent format

Developers often think of logs as unstructured data. In my experience, however, it pays to adopt a consistent format for log entries early on. A consistent structure makes logs easier to parse, search, filter, and analyze, especially with automated tools.

Some standard columns that are almost always good to have include timestamps, log levels (DEBUG, INFO, WARN, ERROR, FATAL), clear messages, and relevant metadata.
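As a minimal sketch of such a format, the example below uses Python's standard `logging` module (chosen only for illustration; the idea carries over to any logging library) and writes one JSON object per line with a timestamp, level, logger name, message, and optional metadata. Passing metadata under an `extra={"meta": {...}}` key is a hypothetical convention of this sketch, not part of the library.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # ISO 8601 timestamp in UTC, taken from the record's creation time.
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical convention: callers pass metadata as extra={"meta": {...}}.
        # setdefault keeps metadata from overwriting the standard fields above.
        meta = getattr(record, "meta", None)
        if isinstance(meta, dict):
            for key, value in meta.items():
                entry.setdefault(key, value)
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        # default=str stops non-JSON-serializable metadata from breaking logging.
        return json.dumps(entry, default=str)


def build_logger(name: str = "app") -> logging.Logger:
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate output via the root logger
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger()
    log.info("order placed", extra={"meta": {"order_id": "A-1001", "amount_cents": 4999}})
```

Because every line is a self-describing JSON object, a log platform can index `level` or `order_id` as columns instead of parsing free text.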

Initiatives like OpenTelemetry (OTel) offer a framework for unifying formats across signals such as metrics, traces, and logs. Its main selling point is a vendor-neutral way to instrument your code and send data to various backends.

Log levels and their importance

Log levels categorize the severity of logged events. Use DEBUG for detailed troubleshooting information, INFO for general application events, WARN for potential issues, ERROR for actual errors, and FATAL for critical failures. Choose the appropriate level to convey the importance of the log entry.
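To make the threshold idea concrete, here is a small sketch with Python's standard `logging` module, used only for illustration. Python names the levels DEBUG, INFO, WARNING (with `WARN` as an alias), ERROR, and CRITICAL (with `FATAL` as an alias). Setting a logger's level discards every record below it; the in-memory `ListHandler` and the messages are made up for the demo.

```python
import logging
from typing import List


class ListHandler(logging.Handler):
    """Keep records in memory so we can see which levels passed the threshold."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: List[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(f"{record.levelname}: {record.getMessage()}")


def emit_one_of_each(threshold: int) -> List[str]:
    logger = logging.getLogger(f"levels-demo-{threshold}")
    logger.setLevel(threshold)  # records below this level are discarded
    logger.propagate = False
    handler = ListHandler()
    logger.addHandler(handler)
    try:
        logger.debug("cache miss for key user:42")               # troubleshooting detail
        logger.info("user 42 logged in")                         # normal application event
        logger.warning("p99 latency 1.8 s exceeds 1 s target")   # potential issue
        logger.error("payment gateway returned HTTP 502")        # actual error
        logger.critical("database unreachable; shutting down")   # Python's name for FATAL
    finally:
        logger.removeHandler(handler)
    return handler.lines


if __name__ == "__main__":
    print(emit_one_of_each(logging.DEBUG))    # all five records
    print(emit_one_of_each(logging.WARNING))  # only WARNING, ERROR, CRITICAL
```

A development build might run at DEBUG while production runs at INFO or WARNING; the call sites stay the same and only the threshold changes.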

Include contextual information

Enrich your logs with metadata like user IDs, transaction IDs, and timestamps. This context allows you to correlate logs across distributed systems and gain a deeper understanding of events.
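One way to attach such context without repeating it at every call site is sketched below with Python's standard `logging.LoggerAdapter`, used here only for illustration. The logger name `checkout` and the IDs are made-up values.

```python
import io
import logging
from typing import TextIO

FORMAT = "%(levelname)s user=%(user_id)s txn=%(transaction_id)s %(message)s"


def make_base_logger(stream: TextIO) -> logging.Logger:
    logger = logging.getLogger("checkout")  # hypothetical service logger
    logger.handlers.clear()  # keep the sketch idempotent if re-run
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(FORMAT))
    logger.addHandler(handler)
    return logger


def for_request(base: logging.Logger, user_id: str, transaction_id: str) -> logging.LoggerAdapter:
    # LoggerAdapter passes this dict as `extra`, so every record it creates carries
    # user_id and transaction_id attributes that the formatter can reference.
    return logging.LoggerAdapter(base, {"user_id": user_id, "transaction_id": transaction_id})


buffer = io.StringIO()
log = for_request(make_base_logger(buffer), user_id="u-42", transaction_id="txn-9f3a")
log.info("payment authorized")
print(buffer.getvalue(), end="")  # INFO user=u-42 txn=txn-9f3a payment authorized
```

One caveat of this design: a record logged through the base logger directly, without the adapter, lacks `user_id` and the formatter will report an error for it. A `logging.Filter` that fills in default values is a common way around that.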

Avoid logging sensitive information

Avoid writing sensitive data such as passwords, credit card numbers, or personally identifiable information (PII) to your logs. If such data must appear, mask or encrypt it before logging.
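Masking can be enforced centrally rather than trusted to every call site. The sketch below uses a Python `logging.Filter` (Python chosen only for illustration) that scrubs the rendered message with a few regular expressions. The patterns are illustrative assumptions, not a complete PII detector; they will miss things, so not logging sensitive fields in the first place remains the safer option.

```python
import logging
import re

# Illustrative patterns only: real PII detection must be tuned to your data.
REDACTIONS = [
    (re.compile(r"(password\s*[=:]\s*)\S+", re.IGNORECASE), r"\1[REDACTED]"),
    (re.compile(r"\b(?:\d[ -]?){12,15}(\d{4})\b"), r"****\1"),  # card-like numbers, keep last 4
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
]


def redact(text: str) -> str:
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


class RedactingFilter(logging.Filter):
    """Scrub the fully rendered message before the handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = ()  # message is already rendered; don't apply %-args again
        return True


if __name__ == "__main__":
    handler = logging.StreamHandler()
    # Attach to the handler (not a logger) so records propagated from child loggers are scrubbed too.
    handler.addFilter(RedactingFilter())
    log = logging.getLogger("auth")
    log.addHandler(handler)
    log.warning("login failed for %s password=%s", "alice@example.com", "hunter2")
    # stderr: login failed for [EMAIL] password=[REDACTED]
```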

Implement log rotation and retention policies

Log files can grow large over time. Implement log rotation to create new files periodically, preventing disk space exhaustion. Establish a log retention policy that dictates how long to store logs based on compliance requirements and your specific needs.
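Both policies map onto handlers in Python's standard `logging.handlers` module, shown here only as an illustration. `RotatingFileHandler` rolls over by size and `TimedRotatingFileHandler` by time; in both, `backupCount` caps how many old files are kept, which acts as a simple retention rule. The 1 KB size and 30-day retention below are assumed values chosen for the demo.

```python
import logging
import tempfile
from logging.handlers import RotatingFileHandler, TimedRotatingFileHandler
from pathlib import Path
from typing import List


def size_rotating_handler(path: Path, max_bytes: int = 1024, backups: int = 3) -> RotatingFileHandler:
    # Roll over before the file exceeds max_bytes; keep at most `backups` old files
    # (app.log.1 ... app.log.N). Older files are deleted, which caps disk usage.
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups, encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    return handler


def daily_handler(path: Path, retention_days: int = 30) -> TimedRotatingFileHandler:
    # Roll over at midnight and keep `retention_days` old files. 30 is an assumed
    # value; derive it from your compliance requirements.
    return TimedRotatingFileHandler(path, when="midnight", backupCount=retention_days, encoding="utf-8")


def demo() -> List[str]:
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "app.log"
        logger = logging.getLogger("rotation-demo")
        logger.setLevel(logging.INFO)
        logger.propagate = False
        handler = size_rotating_handler(path)
        logger.addHandler(handler)
        for i in range(200):
            logger.info("processed request %04d in 12 ms", i)
        logger.removeHandler(handler)
        handler.close()  # release the file before the directory is deleted
        return sorted(p.name for p in Path(tmp).glob("app.log*"))


if __name__ == "__main__":
    print(demo())  # ['app.log', 'app.log.1', 'app.log.2', 'app.log.3']
```

Even though roughly 12 KB was written, only the current file and three backups remain on disk.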

Use logging libraries and frameworks

Leverage established logging libraries like Log4j, Logback, or Serilog to simplify log management. These libraries offer features like log level configuration, formatting, and integration with various logging solutions.
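Python's standard `logging` module plays a similar role in Python. As a sketch of declarative configuration, `logging.config.dictConfig` sets levels, formats, and handlers in one place instead of in application code. The `myapp` logger name is hypothetical; `urllib3` stands in for any noisy dependency you might want to quiet.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,  # leave loggers created before this call working
    "formatters": {
        "plain": {"format": "%(asctime)s %(levelname)s %(name)s %(message)s"},
    },
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",
            "formatter": "plain",
            "level": "INFO",  # the handler drops DEBUG even if a logger allows it
        },
    },
    "loggers": {
        "urllib3": {"level": "WARNING"},  # quiet a chatty dependency
        "myapp": {"level": "DEBUG", "handlers": ["console"], "propagate": False},
    },
}

logging.config.dictConfig(LOGGING)
logging.getLogger("myapp.db").debug("dropped by the console handler's INFO level")
logging.getLogger("myapp.db").info("connection pool ready")
```

Because the configuration is plain data, it can be loaded from a file per environment, which is the same idea behind Log4j, Logback, and Serilog configuration files.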

Advanced logging techniques

When you are delivering commercial solutions, the basics are only a starting point. Here are a few techniques to take your logging further.

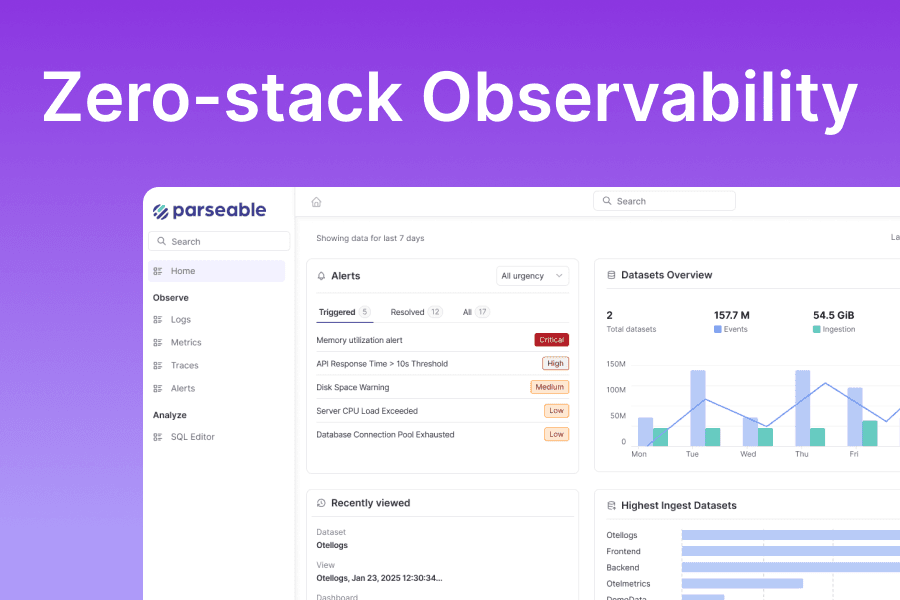

Centralized logging solutions

Centralized logging platforms like Parseable aggregate logs from various sources, enabling efficient collection, storage, and analysis. These tools provide powerful search and filtering capabilities to pinpoint specific events within vast log data.

Log aggregation and analysis

Aggregating logs from distributed systems, microservices, and containers calls for dedicated tools and techniques. Parseable stores logs and lets you query them, using columnar formats in memory (Apache Arrow) and on disk (Apache Parquet) to achieve high ingestion throughput and query performance.

Monitoring and alerting

Configure alerts based on predefined log patterns to receive real-time notifications of critical events. Integrate your logging system with monitoring tools like Prometheus and Grafana to create comprehensive dashboards for application health monitoring.
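In practice, alert rules usually live in the central logging or monitoring platform. To show the underlying idea, here is an in-process sketch as a custom Python `logging.Handler`: it fires a callback when a given number of records matching a level and an optional regex pattern arrive within a sliding time window. The class name, parameters, and `on_alert` callback (which might page someone) are all hypothetical.

```python
import logging
import re
import time
from collections import deque
from typing import Callable, Deque, Optional


class AlertHandler(logging.Handler):
    """Call `on_alert` when `threshold` matching records arrive within `window_s` seconds."""

    def __init__(
        self,
        threshold: int,
        window_s: float,
        on_alert: Callable[[int], None],
        pattern: Optional[re.Pattern] = None,
        level: int = logging.ERROR,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        super().__init__(level=level)  # records below `level` never reach emit()
        self.threshold = threshold
        self.window_s = window_s
        self.on_alert = on_alert
        self.pattern = pattern
        self.clock = clock  # injectable so the window can be tested deterministically
        self.times: Deque[float] = deque()

    def emit(self, record: logging.LogRecord) -> None:
        if self.pattern is not None and not self.pattern.search(record.getMessage()):
            return
        now = self.clock()
        self.times.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while self.times and now - self.times[0] > self.window_s:
            self.times.popleft()
        if len(self.times) >= self.threshold:
            self.on_alert(len(self.times))
            self.times.clear()  # reset so one burst produces one alert


if __name__ == "__main__":
    log = logging.getLogger("payments")
    log.addHandler(AlertHandler(
        threshold=3, window_s=60,
        on_alert=lambda n: print(f"ALERT: {n} gateway timeouts within 60 s"),
        pattern=re.compile(r"timeout"),
    ))
    for _ in range(3):
        log.error("gateway timeout after 30 s")
```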

Using logging for security

Security-focused logging helps detect and respond to security incidents. By monitoring logs for suspicious activity, you can identify potential threats and take proactive measures. Logging also plays a vital role in meeting security standards and regulations such as PCI DSS and GDPR.
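As an example of monitoring for suspicious activity, the Python sketch below scans structured audit records and flags source IPs with repeated failed logins, a common sign of brute-force attempts. The record fields (`event`, `outcome`, `src_ip`) and the threshold of 5 are hypothetical; a real detector would also bound the count to a time window.

```python
import json
from collections import Counter
from typing import Dict, Iterable


def suspicious_sources(lines: Iterable[str], max_failures: int = 5) -> Dict[str, int]:
    """Return source IPs with at least `max_failures` failed logins in `lines`.

    Assumes hypothetical JSON audit records such as
    {"event": "login", "outcome": "failure", "user": "alice", "src_ip": "203.0.113.7"}.
    """
    failures: Counter = Counter()
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip unstructured lines rather than crash
        if not isinstance(event, dict):
            continue
        if event.get("event") == "login" and event.get("outcome") == "failure":
            failures[event.get("src_ip", "unknown")] += 1
    return {ip: count for ip, count in failures.items() if count >= max_failures}


if __name__ == "__main__":
    audit_log = [
        '{"event": "login", "outcome": "failure", "user": "admin", "src_ip": "203.0.113.7"}',
    ] * 6 + ['{"event": "login", "outcome": "success", "user": "alice", "src_ip": "198.51.100.2"}']
    print(suspicious_sources(audit_log))  # {'203.0.113.7': 6}
```

This kind of check only works if login attempts were logged in a consistent, structured form to begin with.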

Optimizing performance with logging

Logging can impact application performance. To minimize this overhead, consider techniques like asynchronous logging, where log messages are written in the background without blocking the main application thread. Batching logs can also improve efficiency by sending multiple entries at once.
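Python's standard library ships building blocks for both ideas, shown here only as an illustration. `QueueHandler` and `QueueListener` move formatting and I/O to a background thread, so the application thread only enqueues records; `MemoryHandler` buffers records and hands them to a target handler together. The logger names and batch size below are assumptions for the sketch.

```python
import io
import logging
import queue
from logging.handlers import MemoryHandler, QueueHandler, QueueListener
from typing import TextIO


def setup_async_logging(name: str, stream: TextIO) -> QueueListener:
    """Route `name`'s records through a queue to a background writer thread."""
    log_queue: "queue.Queue[logging.LogRecord]" = queue.Queue()  # unbounded by default
    sink = logging.StreamHandler(stream)
    sink.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    # The listener's thread does the slow work: formatting and writing.
    listener = QueueListener(log_queue, sink, respect_handler_level=True)

    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    # The application thread only puts records on the queue, which is cheap.
    logger.addHandler(QueueHandler(log_queue))
    listener.start()
    return listener


def batching_handler(target: logging.Handler, batch_size: int = 100) -> MemoryHandler:
    """Buffer records and pass them to `target` together once `batch_size` accumulate,
    or immediately when an ERROR (or worse) record arrives."""
    return MemoryHandler(capacity=batch_size, flushLevel=logging.ERROR, target=target)


if __name__ == "__main__":
    out = io.StringIO()
    listener = setup_async_logging("async-demo", out)
    for i in range(3):
        logging.getLogger("async-demo").info("request %d handled", i)
    listener.stop()  # processes everything already queued, then joins the thread
    print(out.getvalue(), end="")
```

Remember to stop the listener (and flush any `MemoryHandler`) on shutdown; otherwise records still in the queue or buffer can be lost.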

Real world examples

Logging for high-traffic web application

High-traffic web applications require robust logging strategies to handle the deluge of requests and events. Challenges include:

- Balancing verbosity with performance: Capturing critical information without overwhelming log files is crucial.

- Distributed architecture: Logs from various servers, load balancers, and databases need efficient aggregation.

Solutions:

- Log level filtering: Configure log levels to capture only essential events.

- Asynchronous logging: Implement asynchronous logging to minimize performance impact.

- Centralized logging: Aggregate logs from all sources into a centralized platform for analysis.

Key takeaways:

- Prioritize logging critical information for troubleshooting and monitoring.

- Leverage asynchronous logging and centralized solutions for scalability.

Logging for microservices architecture

Microservices architectures introduce unique logging challenges due to the distributed nature of applications.

Best practices for logging in microservices:

- Standardized logging format: Ensure consistency across all microservices for easier correlation.

- Include service and operation IDs: Facilitate tracing requests through the microservices ecosystem.

- Log correlation IDs: Simplify associating logs from different microservices related to the same request.
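A minimal Python sketch of correlation IDs follows, assuming each service receives the ID in an incoming request header and forwards it on outgoing calls. The header name `X-Correlation-ID`, the `handle_request` function, and the service names are hypothetical; `contextvars` keeps the ID separate per thread or asyncio task, and a `logging.Filter` stamps it, together with the service name, onto every record.

```python
import contextvars
import io
import logging
import uuid
from typing import Dict, Mapping, TextIO

# Correlation ID of the request currently being handled; each thread or
# asyncio task sees its own value.
correlation_id = contextvars.ContextVar("correlation_id", default="-")

HEADER = "X-Correlation-ID"  # assumed header name; use whatever your services agree on


class ContextFilter(logging.Filter):
    """Stamp every record with the service name and the current correlation ID."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def filter(self, record: logging.LogRecord) -> bool:
        record.service = self.service
        record.correlation_id = correlation_id.get()
        return True


def build_service_logger(service: str, stream: TextIO) -> logging.Logger:
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.addFilter(ContextFilter(service))
    handler.setFormatter(logging.Formatter(
        "%(levelname)s service=%(service)s cid=%(correlation_id)s %(message)s"))
    logger.addHandler(handler)
    return logger


def handle_request(headers: Mapping[str, str], logger: logging.Logger) -> Dict[str, str]:
    """Hypothetical request handler: returns headers for outgoing downstream calls."""
    # Reuse the caller's ID if present; otherwise this service is the entry point.
    cid = headers.get(HEADER) or str(uuid.uuid4())
    token = correlation_id.set(cid)
    try:
        logger.info("order received")
        # Propagate the same ID so downstream services log it too.
        return {HEADER: cid}
    finally:
        correlation_id.reset(token)  # don't leak the ID into unrelated work


if __name__ == "__main__":
    buf = io.StringIO()
    log = build_service_logger("checkout", buf)
    handle_request({HEADER: "abc-123"}, log)
    print(buf.getvalue(), end="")  # INFO service=checkout cid=abc-123 order received
```

Searching the central log store for `cid=abc-123` then returns every entry for that request across all services.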

Conclusion

By following these best practices, you can transform logging from a passive data collection process into a proactive tool for gaining deep insights into your applications. Parseable is built to handle your logs however large the volume, so you can spend less time worrying about quantity and more on quality. Remember, effective logging is an ongoing journey. Continuously evaluate your logging practices, refine your approach, and leverage advanced techniques to unlock the full potential of this essential tool. Happy logging!