Log data is growing exponentially, and the boundary between log and analytical data is thinner than ever. Accordingly, the logging ecosystem is evolving rapidly. In this post we look at various log collection, storage, and value extraction systems in detail. Read on for a deep dive.

From very early on, applications and servers wrote log events to files on local disks. These files would often start small but keep growing. To retain log data for a fixed duration and keep disks from filling up, the files would then be rotated by tools like logrotate.

Developers SSHed into servers and grepped through these files to find logs of interest and debug issues. After a certain time, older data was no longer useful, since the application had been running without issues in the meantime. Accordingly, logrotate would automatically rotate old logs away, and new data would be written to the same file path. This worked well for a long time and was the de facto standard for logging for many years.

Even today, many teams use this approach. It is simple, works well, and doesn't require any additional infrastructure. Why fix something that isn't broken?

The need for analysis

The rise of the Internet in the late 1990s and early 2000s brought about a new era. As web servers began to generate vast amounts of log data, people realized that log data from user-facing applications like web servers is a great way of understanding user behavior.

Analysis couldn't be achieved by grepping through files. Log messages now needed a predefined structure and new tools to visualize patterns. This gave birth to tools such as Apache Log Analyzer and WebTrends. Webmasters could now analyze log data to gain insights into user traffic and behavior, as well as identify technical issues.
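To make "predefined structure" concrete, here is a minimal sketch that parses access log lines in the widely used Common Log Format and counts responses by status code. The sample lines and the helper names (`parse_clf`, `status_counts`) are made up for illustration; this is not how any particular analyzer of that era was implemented.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [timestamp] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+)'
)


def parse_clf(line):
    """Return a dict of fields for one access log line, or None if it does not match."""
    match = CLF_PATTERN.match(line)
    return match.groupdict() if match else None


def status_counts(lines):
    """Count parsed lines per HTTP status code, skipping lines that fail to parse."""
    parsed = (parse_clf(line) for line in lines)
    return Counter(entry["status"] for entry in parsed if entry is not None)


# Hypothetical sample lines, invented for this example.
SAMPLE_LINES = [
    '203.0.113.7 - - [10/Oct/2000:13:55:36 -0700] "GET /index.html HTTP/1.0" 200 2326',
    '203.0.113.9 - alice [10/Oct/2000:13:56:01 -0700] "GET /missing HTTP/1.0" 404 209',
    '203.0.113.7 - - [10/Oct/2000:13:57:12 -0700] "GET /about.html HTTP/1.0" 200 1500',
    'not a log line',
]

if __name__ == "__main__":
    print(status_counts(SAMPLE_LINES))
```

Once every line follows an agreed structure, questions like "how many 404s did we serve?" become a matter of counting fields rather than eyeballing grep output.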

Too many logs and too many types

Within a few years of the early web server oriented use cases, log data took on several dimensions. "Logs" is now a blanket term that means different things in different contexts. There are many well established types of log data - application logs, infrastructure logs, security logs, audit logs, and network logs.

Each of these types has its own unique characteristics and use cases. For example, application logs are typically generated by the application itself and are used to debug and troubleshoot application issues. Infrastructure logs are generated by infrastructure components such as operating systems, virtualization layers, and containers.

Security logs are generated by security devices such as firewalls, intrusion detection systems, and web application firewalls. Audit logs are generated by the audit system and are used to track user actions and system changes. Network logs are generated by network devices such as routers, switches, and load balancers. All of these offer important insights for different teams and business units.

Realization for Centralization

By the late 2000s and early 2010s, developers and sysadmins were already ingesting humongous volumes of logs for several use cases - most importantly troubleshooting, pattern analysis, security, and compliance. Additionally, it was clear that unifying all logs in a single system yielded the best results.

Log unification meant that log data volumes were beyond a single server's capacity. With the emergence of scalable, distributed big data technologies such as Hadoop during this time, a lot of log data ended up on Hadoop. Apache Flume was the leading Hadoop-based log aggregation tool. Once data was ingested into Hadoop, it was analyzed using tools like Apache Hive, Apache Pig, and Apache Spark.

Eventually, specialized tools for centralized log search emerged, Splunk and Elasticsearch being the most notable. These products could ingest thousands of log events per second, then index and store them on disk reliably and with high availability (via replication). They also provided a powerful query language for searching logs.

Present day

The most notable change in the logging ecosystem compared to the early 2010s is the advent of fully managed (SaaS) offerings. Companies like Datadog, Dynatrace, Sumo Logic, New Relic, etc. have fully cloud-based offerings for log collection, storage, and analysis. These products are typically more expensive than open source alternatives, and the underlying selling point is the convenience of not having to manage the infrastructure. But this convenience comes with data lock-in, exorbitant cost, and a general lack of flexibility.

In my personal experience, teams start with a fully managed offering, but as data grows and use cases around the data (within the org) grow, costs eventually become unsustainable. This leads to reduced retention and throttled queries and dashboards. IMO this is a lost opportunity.

As promised, let's move to the modern logging ecosystem. In the next few sections we'll take a look at the general logging flow, all the components involved, and how it all works together.

Logging Libraries

The first step for a proper logging setup is a logging library. Typically the application development team chooses a logging library at the start of the project. The decision is generally based on the programming language, memory allocation preferences, and format availability (text, JSON, etc.), among others.

These libraries can emit logs in a predefined format. So, the next step is to define an initial format that developers across the team agree on. This format does evolve as the project matures, but an agreed upon initial format is a great starting point.

Typically, the library is configured to emit logs to the console (standard output or standard error) or to a file. This way, there is a level of abstraction between the logging library and the log agent or forwarder. Here is a list of common logging libraries:

| JavaScript | Python | Go | Java | Rust |
|---|---|---|---|---|
| Winston | Log | glog | Std log | Log |
| Pino | Loguru | Logrus | Logback | slog |
| Bunyan | structlog | Zap | Log4j | - |
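As an illustration of agreeing on a format and emitting it to the console, here is a minimal sketch using only Python's standard `logging` module. The field names (`timestamp`, `level`, `logger`, `message`) and the convention of passing extra fields via `extra={"fields": {...}}` are assumptions made for this example, not a standard.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical team convention: callers pass extra={"fields": {...}}.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def build_logger(name, stream=sys.stdout):
    """Return a logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate output via the root logger
    return logger


if __name__ == "__main__":
    log = build_logger("checkout")
    log.info("order placed", extra={"fields": {"order_id": 1234, "status": 201}})
```

Because the application only writes to standard output, it does not need to know which forwarder or agent picks the lines up later.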

Forwarders and Agents

Next in the process is a logging agent or forwarder. Both agents and forwarders collect logs from application output (e.g. a log file or the console) and forward them to a central location. Hence the terms are often used interchangeably, but there are subtle differences.

Forwarders are typically very lightweight, run on each host, and forward logs to a central location with little to no post-collection processing. Examples are Fluent Bit, Filebeat, Logstash Forwarder, Vector, etc.
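The core loop of a forwarder can be sketched in a few lines: remember how far into the file you have read, pick up whatever was appended since, and hand complete lines to a destination. The sketch below is a simplified illustration, not how any of the tools above are implemented. The `sink` callable stands in for a real network client, and rotation, truncation, and retries are deliberately ignored.

```python
import os
import tempfile


def read_new_lines(path, offset):
    """Return (complete_lines, new_offset) for bytes appended after `offset`."""
    with open(path, "rb") as handle:
        handle.seek(offset)
        chunk = handle.read()
    # Forward only complete lines; a partial trailing line waits for the next poll.
    end = chunk.rfind(b"\n") + 1
    text = chunk[:end].decode("utf-8", errors="replace")
    lines = text.split("\n")[:-1]  # drop the empty piece after the final newline
    return lines, offset + end


def forward(lines, sink):
    """Hand each line to `sink`, a hypothetical stand-in for a network client."""
    for line in lines:
        sink(line)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "app.log")
        with open(path, "wb") as log_file:
            log_file.write(b"first event\nsecond event\nthird ev")
        lines, offset = read_new_lines(path, 0)
        forward(lines, print)  # the two complete lines
        with open(path, "ab") as log_file:
            log_file.write(b"ent\n")
        lines, offset = read_new_lines(path, offset)
        forward(lines, print)  # the line that was incomplete on the first poll
```

Note how little state is involved: a path and an offset. That is a large part of why forwarders can stay light enough to share a host with the application.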

Agents, on the other hand, are more sophisticated. They are typically deployed as a service on a dedicated host and can perform additional processing on the logs before forwarding them to a central location. This additional processing can include parsing, enrichment, filtering, sampling, and more. Examples are Fluentd, Logstash, Vector Aggregator, etc. Many teams also use Kafka as an agent and processor before sending logs to a central location.

But what is the need for separate forwarders and agents? When should you use one over the other? Let's consider some scenarios:

- Forwarders run on the same host as the application. This means a forwarder shares resources with the application - and it's impractical to run a resource hungry service on the same host as the application. Hence forwarders are pretty lightweight and usually just forward logs to a target. This lightweight nature means forwarders typically don't perform additional processing on the logs. They also generally don't offer redundancy and failover capabilities.

- This is where agents come in. Agents are typically deployed as a clustered service on dedicated hosts. Agents offer sophisticated buffering, failover, and redundancy capabilities. They are also frequently used to filter PII (Personally Identifiable Information) from logs before forwarding them to a central location.
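The kind of processing an agent performs (parsing, filtering, PII redaction, enrichment) can be sketched as a single function applied to each incoming line. This is an illustration under stated assumptions: the email regex is a deliberately simple stand-in for real PII detection, and dropping `DEBUG` events and adding a `host` field are hypothetical rules chosen for the example.

```python
import json
import re

# Simplistic email matcher; real PII detection needs far more than this.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def process(raw_line, host):
    """Parse, filter, redact, and enrich one log line. Return None if dropped."""
    # Parse: accept JSON objects, fall back to wrapping plain text.
    try:
        event = json.loads(raw_line)
    except json.JSONDecodeError:
        event = {"message": raw_line}
    if not isinstance(event, dict):
        event = {"message": raw_line}

    # Filter: hypothetical rule that drops debug-level noise.
    if event.get("level") == "DEBUG":
        return None

    # Redact: mask email addresses in every top-level string field.
    redacted = {
        key: EMAIL.sub("[REDACTED]", value) if isinstance(value, str) else value
        for key, value in event.items()
    }

    # Enrich: record which host the event came from.
    redacted["host"] = host
    return redacted


if __name__ == "__main__":
    line = '{"level": "INFO", "message": "login by bob@example.com"}'
    print(process(line, "web-1"))
```

Doing this work on a dedicated agent tier keeps the CPU cost of regex matching and JSON parsing away from the application hosts.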

It is important to note here that in several cases forwarders are sufficient. But as use cases and the logging pipelines get more sophisticated, teams choose to deploy agents.

Logging Platforms

Logging platforms are the final step in a logging pipeline. These platforms are responsible for ingesting logs from forwarders and/or agents, storing them in a reliable and scalable manner and providing a powerful interface for text search or analytics as relevant.

Historically, log data was text oriented and the most common use case for a log store was to look for certain text snippets or patterns. Hence text search engines based on indexed log entries were used as logging platforms. These search engines could ingest events, index them, and then store multiple copies of the event (and index) across servers for redundancy. Elastic and Splunk are the most popular ones. There are also modern alternatives to these search engines - MeiliSearch, Tantivy, TypeSense, etc.

If the log data is expected to be a wall of text per log event, the search engine based approach is by all means the best one, since you as the user are interested in finding that needle in the text haystack.

With observability taking the front seat, most modern applications now emit semi-structured or structured logs. These logs are typically JSON or some other structured format, and are easier to parse, analyze, and visualize. In such cases log analytics platforms are a better choice. These platforms are capable of ingesting logs, parsing them, and storing them in a format that is optimized for analytics.

ClickHouse, while an OLAP database, is quite often used for log analytics. Most fully managed platforms (like Datadog, Dynatrace, New Relic, Sumo Logic et al.) are also built around the log analytics paradigm.

So, how do you decide which approach to take? Let's dig into the pros and cons of each approach.

Search Engines

Search engines are great at quickly returning a text snippet or a pattern from humongous volumes of text data. This means that if you're looking for an error message, or for a pattern that doesn't match your other access patterns, search engines are a great choice.
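The data structure that makes this kind of lookup fast is commonly an inverted index: a map from each token to the set of events that contain it. The toy sketch below illustrates the idea only. It is not how Elastic or Splunk are implemented, and the tokenizer and sample events are assumptions made for the example.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"[a-z0-9]+")


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return TOKEN.findall(text.lower())


def build_index(events):
    """Map every token to the set of event ids that contain it."""
    index = defaultdict(set)
    for event_id, text in enumerate(events):
        for token in tokenize(text):
            index[token].add(event_id)
    return index


def search(index, query):
    """Return ids of events that contain every token in the query."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    postings = [index.get(token, set()) for token in tokens]
    return set.intersection(*postings)


# Hypothetical log messages, invented for this example.
EVENTS = [
    "connection timeout while calling payment service",
    "user login succeeded",
    "payment service returned error 500",
]

if __name__ == "__main__":
    index = build_index(EVENTS)
    print(search(index, "payment service"))
    print(search(index, "timeout"))
```

Lookups only touch the postings for the query tokens, which is why they stay fast as data grows. The price is that every token of every event has to pass through `build_index` at write time.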

The problem lies in the ingestion. Indexing is a resource intensive process. As log volumes grow and grow, indexing all of the log data before ingesting it is highly compute intensive and this in turn slows down the ingestion process itself.

Another common issue is that indexing generates index metadata - and at high enough log volume, metadata can outgrow the actual data. Now, the way these systems work is that all this data and the index metadata has to be replicated across servers to ensure data availability and redundancy. This adds to the overall storage and network requirements - eventually making the system difficult and costly to scale.
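A back-of-the-envelope calculation shows how these factors compound. The function below is a rough sketch, assuming index metadata can be expressed as a ratio of raw data size and that every copy stores both data and index. The numbers in the example are purely hypothetical and not measurements from any real system.

```python
def cluster_storage_gb(raw_gb, index_ratio, copies):
    """Estimate total storage: raw data plus index metadata, times copies kept.

    raw_gb      -- size of the raw log data in GB
    index_ratio -- index metadata size as a fraction of raw data (assumed)
    copies      -- total number of copies kept across servers, primary included
    """
    return (raw_gb + raw_gb * index_ratio) * copies


if __name__ == "__main__":
    # Hypothetical inputs: 1000 GB of logs, index as large as the data, 3 copies.
    print(cluster_storage_gb(raw_gb=1000, index_ratio=1.0, copies=3))
```

Under these assumed inputs, 1000 GB of raw logs needs 6000 GB of cluster storage, and each of those copies also has to cross the network.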

Analytics Databases

With major logging libraries and vendor neutral frameworks like OpenTelemetry standardizing on structured logs, analytics databases are getting popular. People realize that this approach is a better fit for observability over structured log data.

Analytics databases are designed to answer questions about your log data. For example: the rate of status 500 responses over the last 10 minutes, the average response time for a particular endpoint over the last 24 hours, and so on.
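In plain Python, the two example questions look roughly like the sketch below. An analytics database would run the equivalent aggregation over columnar storage at a much larger scale. The field names (`ts`, `endpoint`, `status`, `response_ms`) and the sample events are assumptions for illustration, and "rate" is interpreted here as the fraction of requests in the window that returned the given status.

```python
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical reference time and structured log events.
NOW = datetime(2024, 1, 1, 12, 0, 0)
EVENTS = [
    {"ts": datetime(2024, 1, 1, 11, 55), "endpoint": "/checkout", "status": 500, "response_ms": 300},
    {"ts": datetime(2024, 1, 1, 11, 58), "endpoint": "/checkout", "status": 200, "response_ms": 100},
    {"ts": datetime(2024, 1, 1, 11, 59), "endpoint": "/cart", "status": 200, "response_ms": 50},
    {"ts": datetime(2024, 1, 1, 11, 52), "endpoint": "/cart", "status": 200, "response_ms": 70},
    {"ts": datetime(2024, 1, 1, 11, 30), "endpoint": "/checkout", "status": 200, "response_ms": 200},
    {"ts": datetime(2023, 12, 31, 10, 0), "endpoint": "/checkout", "status": 500, "response_ms": 400},
]


def in_window(event, now, window):
    """True if the event timestamp falls within `window` before `now`."""
    return now - window <= event["ts"] <= now


def status_rate(events, now, window, status=500):
    """Fraction of requests in the window that returned `status`."""
    recent = [e for e in events if in_window(e, now, window)]
    if not recent:
        return 0.0
    return sum(1 for e in recent if e["status"] == status) / len(recent)


def average_response_ms(events, endpoint, now, window):
    """Mean response time for one endpoint in the window, or None if no data."""
    values = [
        e["response_ms"]
        for e in events
        if e["endpoint"] == endpoint and in_window(e, now, window)
    ]
    return mean(values) if values else None


if __name__ == "__main__":
    print(status_rate(EVENTS, NOW, timedelta(minutes=10)))
    print(average_response_ms(EVENTS, "/checkout", NOW, timedelta(hours=24)))
```

Neither question needs full-text search. Both only need a few typed fields per event, which is exactly what structured logs provide.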

Structured logs also offer a log to metrics pathway. Fields in the structured logs can be used to derive aggregates and this can be used to generate metrics. This is a great way to get a quick overview of the health of your application - with minimal effort.
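As a small illustration of the log to metrics idea, the sketch below turns structured events into a per-minute request count keyed by status code, which is the shape of a typical counter metric. The field names `ts` and `status` and the sample events are assumptions made for the example.

```python
from collections import Counter
from datetime import datetime


def requests_per_minute(events):
    """Count events per (minute, status) bucket."""
    counts = Counter()
    for event in events:
        # Truncate the timestamp to the start of its minute.
        minute = event["ts"].replace(second=0, microsecond=0)
        counts[(minute, event["status"])] += 1
    return counts


# Hypothetical structured log events.
SAMPLE_EVENTS = [
    {"ts": datetime(2024, 1, 1, 12, 0, 5), "status": 200},
    {"ts": datetime(2024, 1, 1, 12, 0, 40), "status": 200},
    {"ts": datetime(2024, 1, 1, 12, 0, 59), "status": 500},
    {"ts": datetime(2024, 1, 1, 12, 1, 10), "status": 200},
]

if __name__ == "__main__":
    for (minute, status), count in sorted(requests_per_minute(SAMPLE_EVENTS).items()):
        print(minute.isoformat(), status, count)
```

The application did nothing special to produce this metric. It only logged a timestamp and a status field, and the aggregate fell out of the data.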

Because structured logging is relatively young, users don't have great open source options. Hence several users have turned to OLAP databases like ClickHouse. ClickHouse is a columnar database designed for analytics, but it is not built for log-specific use cases and lacks features like alerting, anomaly detection, and event drill-down.

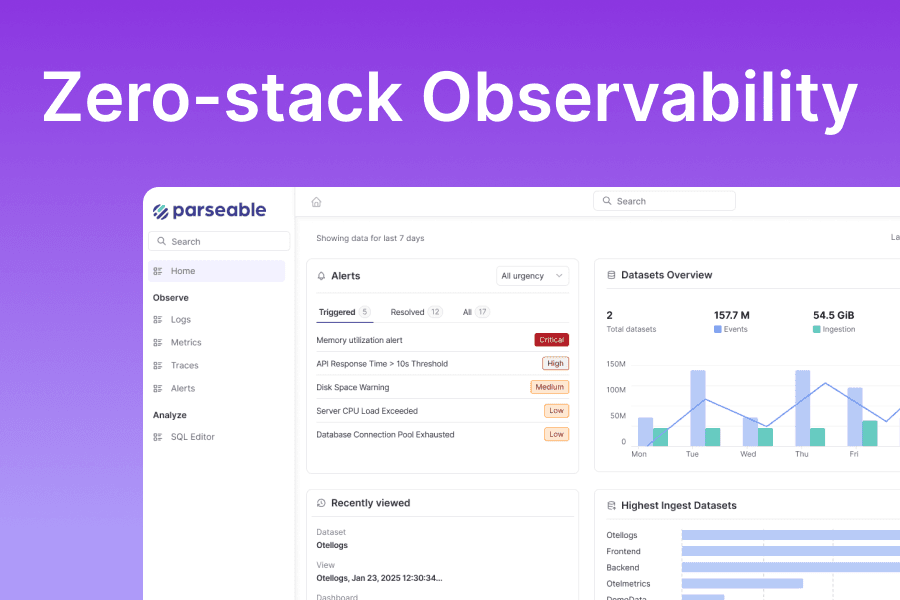

This is one of the key reasons we started building Parseable. We wanted to build a unified observability platform that is optimized for all kinds of telemetry and offers a great user experience. Read more about this in the introduction blog post.

Summary

In this post we covered the basics of logging and the various components of a logging pipeline. We also looked at the pros and cons of search engines and analytics databases. I hope this post was helpful and you learned something new.

The Parseable community hangs out on Slack and GitHub. To learn more about Parseable, check out the documentation.