Audit logs are a core component of security and observability in Kubernetes. This post explains how to ingest and store Kubernetes audit logs in Parseable, and how to set up alerts on these logs to get notified when a specific event occurs. In this example, we'll set up an alert that notifies us when a service account accesses a secret it should not have access to.

We will demonstrate how Parseable stores audit logs, using Vector as the agent. But before that, let's understand audit logs.

Prerequisites

- A Kubernetes cluster with admin access. If you don't have one, you can create a cluster using Minikube or Kind.

- kubectl installed on your machine.

Kubernetes audit logs

Kubernetes auditing provides a security-relevant, chronological set of records documenting the sequence of actions in a cluster. The cluster audits the following activities:

- All activities initiated by a user.

- Events generated by applications that use the Kubernetes API.

- Kubelet activity.

- Scheduler activity.

- All events generated by the control plane.

Kubernetes audit logs come in very handy for answering what happened, when it happened, and who did it. However, auditing is not enabled in a Kubernetes cluster by default. To enable it, you create an audit policy and pass it to the kube-apiserver together with a backend to write events to, for example with the --audit-policy-file and --audit-log-path flags.

How Kubernetes audit logging works

The life cycle of a Kubernetes audit record starts inside the kube-apiserver. Each request can generate an audit event at each stage of its execution; these events are then processed according to the audit policy and written to a backend. The policy determines what data is recorded, and the backends store the records.
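The log backend writes each event as one JSON object per line. Because a single request can produce an event per stage (the defined stages are RequestReceived, ResponseStarted, ResponseComplete and Panic), a consumer often keeps only one stage per request. Below is a minimal Python sketch under that assumption; the sample events and the `completed_events` helper are hypothetical, and real events carry more fields (timestamps, source IPs, annotations).

```python
import json

# Illustrative audit events for ONE request (same auditID), trimmed to a few of
# the fields a real audit.k8s.io/v1 Event carries; not captured from a cluster.
COMMON = {
    "kind": "Event",
    "apiVersion": "audit.k8s.io/v1",
    "level": "Metadata",
    "auditID": "example-audit-id",
    "verb": "list",
    "requestURI": "/api/v1/namespaces/default/secrets",
    "user": {"username": "system:serviceaccount:default:authorized-user"},
    "objectRef": {"resource": "secrets", "namespace": "default", "apiVersion": "v1"},
}
SAMPLE_LOG = "\n".join([
    json.dumps({**COMMON, "stage": "RequestReceived"}),
    json.dumps({**COMMON, "stage": "ResponseComplete", "responseStatus": {"code": 200}}),
])


def completed_events(log_text):
    """Parse JSON-lines audit output and keep only ResponseComplete events."""
    events = []
    for line in log_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        event = json.loads(line)
        if event.get("stage") == "ResponseComplete":
            events.append(event)
    return events


for event in completed_events(SAMPLE_LOG):
    print(event["auditID"], event["stage"], event["verb"], event["responseStatus"]["code"])
```

Filtering on one stage avoids counting the same request twice when you build queries or alerts on top of the log.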

Kubernetes Audit policy

An audit policy defines a set of rules that specify which events should be recorded and what data they should include. When an event is processed, it is compared against the rules in order, and the first matching rule sets the event's audit level: None, Metadata, Request, or RequestResponse.
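The first-match behaviour is easy to get wrong when ordering rules, so here is a simplified Python sketch of it. The `POLICY` list and `audit_level` function are hypothetical: real rules can also match on users, user groups, namespaces, API groups and non-resource URLs, while this sketch only looks at verbs and resources.

```python
# A hypothetical, simplified policy. An omitted field matches everything,
# which this sketch mirrors for "verbs" and "resources".
POLICY = [
    {"level": "None", "verbs": ["watch"]},                          # drop noisy watches
    {"level": "Metadata", "resources": ["secrets", "configmaps"]},  # no payloads for secrets
    {"level": "RequestResponse", "resources": ["pods"]},
]


def audit_level(verb, resource, rules):
    """Return the level of the FIRST rule that matches; later rules are ignored."""
    for rule in rules:
        if "verbs" in rule and verb not in rule["verbs"]:
            continue
        if "resources" in rule and resource not in rule["resources"]:
            continue
        return rule["level"]
    return "None"  # an event that matches no rule is not recorded


print(audit_level("get", "secrets", POLICY))
print(audit_level("watch", "secrets", POLICY))
print(audit_level("create", "deployments", POLICY))
```

The three calls return Metadata, None (the watch rule comes first) and None (no rule matched). Logging secrets at the Metadata level records who accessed which secret without writing the secret's contents into the audit log, which is all the alert later in this post needs.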

Enable auditing in Kubernetes

To enable auditing in Kubernetes, we need to create an audit policy and reference it in the kube-apiserver configuration. The audit policy is a YAML file that defines the rules for logging events. Refer to this link to learn more about audit policies.

Follow this hands-on tutorial to enable auditing in your Kubernetes cluster: Kubernetes Audit Solution with Parseable

Kubernetes auditing with Parseable

In this post, we'll configure Vector to ingest Kubernetes audit logs into Parseable. Then we'll configure Parseable to generate alerts whenever a Kubernetes secret is accessed without authorization. Here is the high-level architecture of the implementation:

Parseable installation on Kubernetes Cluster

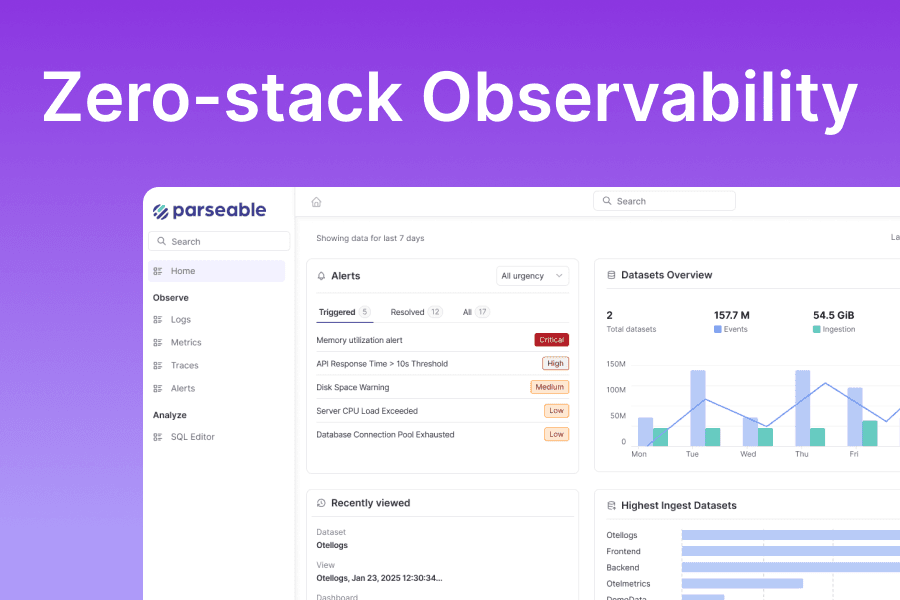

Parseable is a lightweight, high-speed logging and observability platform for cloud-native applications. Follow the installation guide to install Parseable on your Kubernetes cluster. Once the installation is done, verify that the Parseable pods are running in the parseable namespace.

kubectl get pods -n parseable

Now the application is ready and exposed on the relevant port. Next, we'll use Vector, configure Kubernetes audit logs as its data source, and sink them to the Parseable server.

Vector installation

We’ll install Vector via Helm, using the values-k8s-audit.yaml file, which contains the relevant configuration details.

helm repo add vector https://helm.vector.dev

wget https://www.parseable.com/blog/vector/values-k8s-audit.yaml

helm install vector vector/vector --namespace vector --create-namespace --values values-k8s-audit.yaml

kubectl get pods -n vector

With this values file, Vector collects logs from the /var/log/audit/audit.log file (this must match the audit log path configured on the kube-apiserver) and sends these events to the auditdemo log stream in Parseable. Once this is done, you can verify the log events in the Parseable Console.
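The alert rule later in this post refers to fields such as `objectRef_resource` and `user_username`, which suggests that the nested keys of each audit event are joined with underscores once the event is stored. The Python sketch below illustrates that naming under the assumption of an underscore separator with lists left untouched; the `flatten` helper is hypothetical and is not Parseable's or Vector's implementation.

```python
def flatten(obj, parent="", sep="_"):
    """Flatten nested dicts into one level, joining key paths with `sep`."""
    flat = {}
    for key, value in obj.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value  # scalars and lists are kept as-is
    return flat


# Illustrative event, trimmed to the fields the alert rule uses.
event = {
    "verb": "get",
    "user": {
        "username": "system:serviceaccount:default:unauthorized-user",
        "groups": ["system:serviceaccounts"],
    },
    "objectRef": {"resource": "secrets", "namespace": "default", "name": "mysecret"},
}
print(flatten(event))
```

Knowing the flattened names is what lets you write a rule against `objectRef_resource` rather than the nested `objectRef.resource` path.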

Track unauthorized Kubernetes access with Parseable

While there are several inherent benefits of using Kubernetes audit logs and Parseable, we will focus on one of the more interesting use cases, i.e. auditing Kubernetes secrets.

By default, Kubernetes Secrets are only base64-encoded, not encrypted. Anyone who can read a Secret can decode it, so unauthorized access to Secrets is a constant concern.

To demonstrate this issue, we'll create a secret and two service accounts, and then, by mistake, grant read/write access to secrets to both service accounts via an RBAC policy instead of just one. Next, we'll create one pod per service account and read the secret from each pod. Finally, we'll use Parseable to alert on secret access by the service account that should not have it.

Create a Secret

First, base64-encode the secret data, a username and a password.

echo -n parseable | base64

echo -n parseable@123 | base64

Using the encoded values, create a manifest file named secret.yaml, then apply it to create the secret.

# secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: cGFyc2VhYmxl
  password: cGFyc2VhYmxlQDEyMw==

kubectl apply -f secret.yaml

kubectl get secrets
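To see why encoding is not protection, here is a small Python sketch using the standard `base64` module. The `encode` and `decode` helper names are illustrative; they reproduce the values in secret.yaml and reverse them without any key.

```python
import base64


def encode(value):
    """Base64-encode a string, like `echo -n value | base64`."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode(value):
    """Reverse the encoding; no key is involved, so anyone can do this."""
    return base64.b64decode(value).decode("utf-8")


print(encode("parseable"))
print(encode("parseable@123"))
print(decode("cGFyc2VhYmxlQDEyMw=="))
```

The `-n` flag in the echo commands matters: without it, the trailing newline is encoded too, so `parseable` becomes `cGFyc2VhYmxlCg==` instead of `cGFyc2VhYmxl`. Alternatively, `kubectl create secret generic mysecret --from-literal=username=parseable --from-literal=password=parseable@123` does the encoding for you.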

Create Service Accounts

Create service accounts to be used to access the secret.

kubectl create sa authorized-user

kubectl create sa unauthorized-user

kubectl get sa

The authorized-user service account needs a role that lets it manage secrets. We will also grant the same access to the unauthorized-user service account, to simulate a misconfiguration that leads to unauthorized access.

Set RBAC Policies

- Create a Role and set the RBAC policy by applying the role.yaml manifest.

# role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: deployment-manager
rules:
- apiGroups: ["", "apps"] # "" indicates the core API group
  resources: ["pods", "deployments", "replicasets", "configmaps", "secrets"]
  verbs: ["get", "watch", "list", "create", "update"]

kubectl apply -f role.yaml

- Assign the above role to both Service Accounts.

# role-binding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: deployment-manager-binding
subjects:
- kind: ServiceAccount
  name: authorized-user
- kind: ServiceAccount
  name: unauthorized-user
roleRef:
  kind: Role
  name: deployment-manager
  apiGroup: rbac.authorization.k8s.io

- Apply the role-binding.yaml manifest to bind the role to the service accounts.

kubectl apply -f role-binding.yaml
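On a live cluster, `kubectl auth can-i get secrets --as=system:serviceaccount:default:unauthorized-user` answers whether the misconfiguration took effect (assuming the default namespace); it should print `yes`. The Python sketch below models the same decision for this post's Role and RoleBinding. `ROLE_RULES`, `BINDING_SUBJECTS` and `can` are hypothetical names, and the model ignores wildcards, resourceNames, namespaces and ClusterRoleBindings.

```python
# Mirrors role.yaml and role-binding.yaml above.
ROLE_RULES = [
    {
        "apiGroups": ["", "apps"],
        "resources": ["pods", "deployments", "replicasets", "configmaps", "secrets"],
        "verbs": ["get", "watch", "list", "create", "update"],
    }
]
BINDING_SUBJECTS = [
    {"kind": "ServiceAccount", "name": "authorized-user"},
    {"kind": "ServiceAccount", "name": "unauthorized-user"},  # the deliberate mistake
]


def can(sa_name, verb, resource, api_group=""):
    """Return True if the RoleBinding grants `sa_name` the verb on the resource."""
    bound = any(
        s["kind"] == "ServiceAccount" and s["name"] == sa_name for s in BINDING_SUBJECTS
    )
    if not bound:
        return False  # RBAC denies by default: no binding, no permission
    return any(
        api_group in r["apiGroups"] and resource in r["resources"] and verb in r["verbs"]
        for r in ROLE_RULES
    )


for sa in ("authorized-user", "unauthorized-user"):
    print(sa, can(sa, "get", "secrets"))
```

Both accounts print True. Removing the second subject from the binding (here and in role-binding.yaml) is what makes `can("unauthorized-user", "get", "secrets")` return False.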

Now, let's read the secret from both accounts. To do that, we'll create a pod for each service account.

Create Kubernetes pods to access secrets

- Create a pod that runs as the authorized-user service account.

# pod-authorized-user.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-pod
spec:
  serviceAccountName: authorized-user
  containers:
  - name: nginx
    image: registry.cloudyuga.guru/library/nginx:alpine
  restartPolicy: Never

kubectl apply -f pod-authorized-user.yaml

kubectl get pods

- Now, exec into app-pod, which runs as the authorized-user service account, and try to access the secrets with curl.

kubectl exec -it app-pod -- sh

- Run the following commands inside the pod to access the secrets with curl.

cd /var/run/secrets/kubernetes.io/serviceaccount/

ls -la

export APISERVER=https://${KUBERNETES_SERVICE_HOST}

export SERVICEACCOUNT=/var/run/secrets/kubernetes.io/serviceaccount

export NAMESPACE=$(cat ${SERVICEACCOUNT}/namespace)

export TOKEN=$(cat ${SERVICEACCOUNT}/token)

export CACERT=${SERVICEACCOUNT}/ca.crt

curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api/v1/namespaces/${NAMESPACE}/secrets

- Let's decode the secret.

echo <encoded-secret-data> | base64 -d
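The curl call returns a SecretList: a JSON object whose `items` each carry `metadata` and a base64-encoded `data` map. Instead of decoding values one by one, a short Python sketch can decode the whole response. The `decode_secret_list` helper is hypothetical, and the sample body is trimmed to the fields used here.

```python
import base64
import json


def decode_secret_list(body):
    """Return {secret_name: {key: decoded_value}} from a SecretList JSON body."""
    secret_list = json.loads(body)
    decoded = {}
    for item in secret_list.get("items", []):
        data = item.get("data") or {}  # a Secret may have no data at all
        decoded[item["metadata"]["name"]] = {
            key: base64.b64decode(value).decode("utf-8") for key, value in data.items()
        }
    return decoded


SAMPLE = json.dumps({
    "kind": "SecretList",
    "apiVersion": "v1",
    "items": [{
        "metadata": {"name": "mysecret"},
        "type": "Opaque",
        "data": {"username": "cGFyc2VhYmxl", "password": "cGFyc2VhYmxlQDEyMw=="},
    }],
})
print(decode_secret_list(SAMPLE))
```

Against the secret created earlier, this yields the username `parseable` and the password `parseable@123` in plain text.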

The decoded output reveals the username and password stored in the secret. Next, create an alert in Parseable that notifies you when an unauthorized service account accesses the secret, so that the misconfigured RBAC policy can be found and fixed.

Set up alert

Use the following JSON to set up an alert from the Parseable UI: click console -> config -> alert.

{
  "version": "v1",
  "alerts": [
    {
      "name": "Unauthorized access",
      "message": "secret was accessed by an unauthorized user",
      "rule": {
        "config": "(verb =% \"list\" or verb =% \"get\") and (objectRef_resource = \"secrets\" and user_username !% \":authorized-user\")",
        "type": "composite"
      },
      "targets": [
        {
          "type": "webhook",
          "endpoint": "<webhook.site_custom_endpoint>",
          "skip_tls_check": false,
          "repeat": {
            "interval": "10s",
            "times": 5
          }
        }
      ]
    }
  ]
}

Here, only the authorized-user service account, whose audit username is system:serviceaccount:<namespace>:authorized-user, is allowed to access the secret. If any other service account lists or gets a secret, an alert is generated. To set up the target endpoint, open https://webhook.site and copy your unique URL. Paste it in place of <webhook.site_custom_endpoint>, then click the Save button.
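One subtlety in the rule: `authorized-user` is a substring of `unauthorized-user`, so a does-not-contain check against the bare name also excludes the unauthorized account, and the alert never fires for it. Anchoring the needle with the colon that precedes the account name in `system:serviceaccount:<namespace>:<name>` avoids this. The Python sketch below approximates the rule on flattened events; it assumes that `=%` means "contains" and `!%` means "does not contain", and `contains` and `rule_fires` are hypothetical helpers, not Parseable's evaluator.

```python
def contains(text, needle):
    # Assumption: Parseable's `=%` means "contains" and `!%` "does not contain".
    return needle in text


def rule_fires(event, allowed_needle):
    """Approximate the alert's composite rule on one flattened audit event."""
    verb_matches = contains(event["verb"], "list") or contains(event["verb"], "get")
    return (
        verb_matches
        and event["objectRef_resource"] == "secrets"
        and not contains(event["user_username"], allowed_needle)
    )


authorized = {
    "verb": "list",
    "objectRef_resource": "secrets",
    "user_username": "system:serviceaccount:default:authorized-user",
}
unauthorized = dict(
    authorized, user_username="system:serviceaccount:default:unauthorized-user"
)

# Bare name: "authorized-user" is a substring of "unauthorized-user", so neither fires.
print(rule_fires(authorized, "authorized-user"), rule_fires(unauthorized, "authorized-user"))
# Colon-anchored name: only the unauthorized account fires.
print(rule_fires(authorized, ":authorized-user"), rule_fires(unauthorized, ":authorized-user"))
```

Note that any other identity that lists or gets secrets, such as a control-plane component, also satisfies this rule, so you may want to narrow it further for a busy cluster.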

Next, we will try to access the secrets from the unauthorized-user service account and then check the unique URL for alerts.

Alert on unauthorized access

- Create another pod that runs as the unauthorized-user service account.

# pod-test-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  serviceAccountName: unauthorized-user
  containers:
  - name: nginx
    image: registry.cloudyuga.guru/library/nginx:alpine
  restartPolicy: Never

kubectl apply -f pod-test-demo.yaml

kubectl get pods

Now, exec into test-pod, which runs as the unauthorized-user service account, as we did previously.

kubectl exec -it test-pod -- sh

- Run the following commands inside the pod to access the secrets with curl:

cd /var/run/secrets/kubernetes.io/serviceaccount/

ls -la

export APISERVER=https://${KUBERNETES_SERVICE_HOST}

export SERVICEACCOUNT=/var/run/secrets/kubernetes.io/serviceaccount

export NAMESPACE=$(cat ${SERVICEACCOUNT}/namespace)

export TOKEN=$(cat ${SERVICEACCOUNT}/token)

export CACERT=${SERVICEACCOUNT}/ca.crt

curl --cacert ${CACERT} --header "Authorization: Bearer ${TOKEN}" -X GET ${APISERVER}/api/v1/namespaces/${NAMESPACE}/secrets

We have now accessed the secrets through an unauthorized service account. How would we know? The details of this access are recorded in the audit logs, so let's verify that the Parseable setup above triggers an alert.

- Open the unique URL in a new tab and check the alert.

We can see the "Unauthorized access" alert message triggered for the auditdemo stream, as shown in the above figure. We can also check the Kubernetes audit logs in the Parseable UI. This way, a cluster admin can quickly find unauthorized activity in the cluster and fix it, in this case by removing unauthorized-user from the RoleBinding.
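The alert fired on the verb recorded in the audit event, which is not the HTTP method. For a GET, the API server records `list` on a collection URL such as the `.../secrets` path that curl requested, `get` on a single named object such as `.../secrets/mysecret`, and `watch` when `watch=true` is set; that is why the rule matches both list and get. The Python sketch below shows this mapping in simplified form. The `audit_verb` helper is hypothetical, only handles namespaced core-group paths, and the API server address is a placeholder.

```python
from urllib.parse import parse_qs, urlparse


def audit_verb(method, url):
    """Simplified: map a GET on a namespaced core-group resource to its audit verb."""
    if method != "GET":
        raise ValueError("this sketch only covers read (GET) requests")
    parsed = urlparse(url)
    if parse_qs(parsed.query).get("watch") == ["true"]:
        return "watch"
    # /api/v1/namespaces/<namespace>/<resource>[/<name>]
    segments = [s for s in parsed.path.split("/") if s]
    return "get" if len(segments) >= 6 else "list"


base = "https://10.96.0.1/api/v1/namespaces/default/secrets"  # placeholder address
print(audit_verb("GET", base))
print(audit_verb("GET", base + "/mysecret"))
print(audit_verb("GET", base + "?watch=true"))
```

The three calls return list, get and watch; a rule that matched only `get` would have missed the curl call used in this walkthrough.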