Dagger is a relatively new developer tool from the original creator of Docker. It's not part of the "infrastructure as code" world but of what I like to call "code as infrastructure". Instead of defining infrastructure and what you want to do with it as a tangle of bash scripts and YAML files, you can use your programming language of choice.

Dagger calls everything you define, and everything it does, a "function". Each function runs ephemerally when called, often in a container or interacting with a container. This means that anything you create with Dagger is also portable. You can take the same code and move it between continuous integration (CI) platforms, run your own CI alternative on any hosting platform, and use your team's local computers.

This ephemeral and portable nature presents a perfect opportunity for a centralized logging system. Just like Parseable!

This post looks at some of the ways it's possible to integrate Dagger with Parseable, and as we plan to create a dedicated Dagger module, we ask for some feedback and ideas for the future.

Prerequisites

- Install Docker following the installation documentation.

- Install Dagger following the installation documentation.

- Follow the Dagger Quickstart. It helps you understand the basic concepts and gives an example application for the rest of this post.

Running Parseable ephemerally

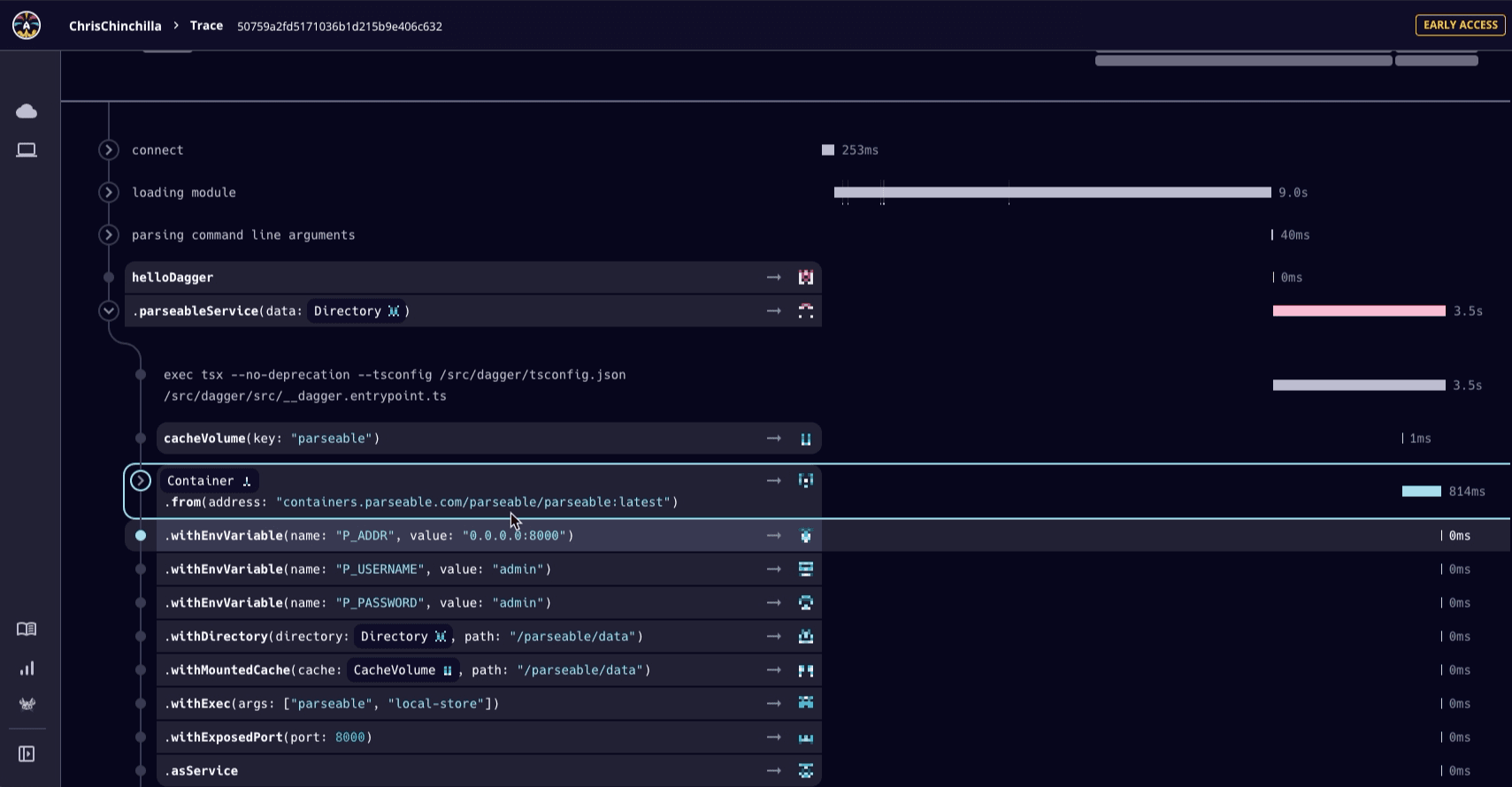

By default, the containers that Dagger creates are "just in time" and only run as long as they're needed. However, you can maintain state between runs. For example, if you wrote your Dagger functions in TypeScript, create the following function:

    @func()
    parseableService(data: Directory): Service {
      const parseableCache = dag.cacheVolume("parseable")
      return dag
        .container()
        .from("containers.parseable.com/parseable/parseable:latest")
        .withEnvVariable("P_ADDR", "0.0.0.0:8000")
        .withDirectory("/parseable/data", data)
        .withMountedCache("/parseable/data", parseableCache)
        .withExec(["parseable", "local-store"])
        .withExposedPort(8000)
        .asService()
    }

This code does quite a few things but mostly consists of chained methods that are part of the Dagger client, dag in this case. Each chained method does the following:

- Chained Dagger methods operate on a handful of core types, and .container() indicates that these will work with an OCI-compliant container.
- .from() defines the image to use.
- .withEnvVariable() defines the environment variables to pass to the container.
- .withDirectory() copies the local directory you specify to the given path in the container. You specify the directory with a data command line argument, which allows for flexibility when you run the function. Note that the parseableService() function also takes the data variable as a parameter of type Directory.
- .withMountedCache() sets a folder in the container as the cache. In this case, that's the data directory, meaning that the Parseable data persists between restarts and between the container and the local machine.
- .withExec() sets the command to run when starting the container, in this case, running Parseable in local-store mode.
- .withExposedPort() sets the container port to be exposed to other containers.
- .asService() runs the container as a service, which in the context of Dagger is something that provides TCP connectivity.

You can then run the function directly with the following command, passing the local directory with the data argument:

dagger call parseable-service --data="./staging"
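Note that the command uses parseable-service rather than parseableService, because the Dagger CLI exposes function and argument names in kebab-case. The sketch below only approximates that mapping for illustration. The toKebabCase helper is hypothetical and is not Dagger's own implementation.

```typescript
// Illustrative only: approximates how a camelCase function name maps to the
// kebab-case name used with "dagger call". Not Dagger's actual implementation.
function toKebabCase(name: string): string {
  // Insert a hyphen at each lower-to-upper boundary, then lowercase everything.
  return name.replace(/([a-z0-9])([A-Z])/g, "$1-$2").toLowerCase();
}

console.log(toKebabCase("parseableService"));
```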

But running the function on its own isn't that useful, as Dagger creates the container but does nothing with it. Using it as a service from another function is more useful. For example, again in TypeScript:

.withServiceBinding("parseable", this.parseableService())

This method binds the parseableService to this function and gives it the "parseable" alias, which you can then use within the function, for example, to make TCP requests using the alias instead of the IP address, which would be dynamic anyway.

This shows you how Parseable could run as a function in Dagger, but the approach has limits. While you can persist the data between runs, by default Dagger only keeps containers alive for as long as they're needed, or for a few seconds.

So, how do you use the web UI to query and analyze data?

One option is to run Parseable in Docker outside Dagger and mount the data directory. But perhaps a better idea is to keep Parseable running separately elsewhere and write data to it as needed. That's what I cover next.

Sending data from Dagger to Parseable

Start Parseable as a Docker container:

    docker run -p 8000:8000 \
      -v /tmp/parseable/data:/parseable/data \
      -e P_FS_DIR=/parseable/data \
      containers.parseable.com/parseable/parseable:latest \
      parseable local-store

This replicates much of the setup from the previous step, but in a Docker-native way.

To send data to the Parseable instance, you send POST requests to its API endpoint. With Dagger, you need to issue the request from a container, and depending on the image used, that container might first need a dependency added. Both installing the dependency and sending the request use the withExec method, which runs arbitrary commands in the container. For example, to add cURL as a dependency:

.withExec(["apk", "add", "curl"])

At this stage, you could just issue cURL commands the same way, but that reduces Dagger's flexibility and reusability. A better approach is to create a service binding again, but this time pass in the URL of the running Parseable instance to create the service.

Add the following to a function:

.withServiceBinding("parseable", svc)

This again creates an alias, but this time based on a svc variable of type Service. You also need to add svc as a parameter to the containing function. For example, in the buildEnv function from the Dagger Quickstart:

    @func()
    buildEnv(source: Directory, svc: Service): Container {
      …
    }

Then to send a POST request, you again use the .withExec method but using the alias as the instance address:

    .withExec([
      "sh",
      "-c",
      `curl -X POST -H 'Authorization: Basic YWRtaW46YWRtaW4=' http://parseable/api/v1/logstream/dagger --data ${json}`])

Then, call the function and pass the URL parameter. For example, using the existing buildEnv function:

dagger call build-env --source=. --svc=tcp://host.docker.internal:8000

In this case, I pass the internal Docker Desktop address of the Parseable container running in Docker, but this could be any other accessible address.
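The cURL command shown earlier interpolates the JSON payload into a shell string, which can break if the payload contains quotes or spaces. As a rough sketch, a small helper could build the argument array for withExec and quote each piece. The helper names shellQuote and curlIngestArgs are hypothetical, the Content-Type header is an assumption on my part, and the credentials are the default admin/admin pair that the Basic header in this post encodes.

```typescript
// Hypothetical helpers; only the endpoint path and the default admin/admin
// credentials come from the post. The Content-Type header is an assumption.

// Wrap a string in single quotes so sh treats it as one literal argument.
function shellQuote(value: string): string {
  return `'${value.replace(/'/g, `'\\''`)}'`;
}

// Build the argument list for withExec() that POSTs events to a log stream.
function curlIngestArgs(
  host: string,
  stream: string,
  events: object[],
  username = "admin",
  password = "admin",
): string[] {
  // btoa handles Latin-1 input only, which is enough for these credentials.
  const auth = btoa(`${username}:${password}`);
  const command = [
    "curl -X POST",
    `-H ${shellQuote(`Authorization: Basic ${auth}`)}`,
    `-H ${shellQuote("Content-Type: application/json")}`,
    `--data ${shellQuote(JSON.stringify(events))}`,
    `http://${host}/api/v1/logstream/${stream}`,
  ].join(" ");
  return ["sh", "-c", command];
}

const args = curlIngestArgs("parseable", "dagger", [{ step: "build", status: "ok" }]);
console.log(args[2]);
```

You could then pass the result straight to .withExec() in place of the hand-written array.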

Dagger functions also run code, which could log data to Parseable, for example, in the case of TypeScript, using the Bunyan plugin. This means that your application code and "infrastructure code" can all be written to Parseable.
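If you'd rather not pull in a logging library, the same idea can be sketched with a few lines of plain TypeScript that batch records and POST them to a log stream. Everything here is illustrative: createParseableLogger and its method names are hypothetical, the credentials are the defaults used elsewhere in this post, and the Content-Type header is an assumption. The sender is injectable so you can swap fetch for something else.

```typescript
// Hypothetical names throughout; the endpoint path and credentials mirror the
// defaults used earlier in the post.
type LogRecord = Record<string, unknown>;
type SendInit = { method: string; headers: Record<string, string>; body: string };
type Sender = (url: string, init: SendInit) => Promise<unknown>;

function createParseableLogger(
  baseUrl: string,
  stream: string,
  send: Sender = (url, init) => fetch(url, init),
) {
  const buffer: LogRecord[] = [];
  return {
    // Queue a record; nothing is sent until flush() is called.
    log(level: string, message: string, fields: LogRecord = {}): void {
      buffer.push({ ...fields, level, message, time: new Date().toISOString() });
    },
    // Send all queued records as one JSON array and empty the queue.
    async flush(): Promise<number> {
      if (buffer.length === 0) return 0;
      const batch = buffer.splice(0, buffer.length);
      await send(`${baseUrl}/api/v1/logstream/${stream}`, {
        method: "POST",
        headers: {
          Authorization: "Basic YWRtaW46YWRtaW4=",
          "Content-Type": "application/json",
        },
        body: JSON.stringify(batch),
      });
      return batch.length;
    },
  };
}

// Usage (not executed here, as it needs a reachable Parseable instance):
//   const logger = createParseableLogger("http://host.docker.internal:8000", "dagger");
//   logger.log("info", "build started", { step: "build" });
//   await logger.flush();
```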

Bonus: Send Dagger OpenTelemetry data to Parseable

Behind the scenes, Dagger generates OpenTelemetry (OTEL) data, and in the case of traces, it already sends these to a Cloud instance. Parseable can ingest OpenTelemetry logs sent to its /v1/logs endpoint.

This needs an OTEL collector running with the following configuration:

    receivers:
      otlp:
        protocols:
          grpc: null
          http:
            endpoint: 0.0.0.0:4318
    exporters:
      otlphttp:
        endpoint: 'http://host.docker.internal:8000'
        headers:
          Authorization: 'Basic YWRtaW46YWRtaW4='
          X-P-Log-Source: otel
          X-P-Stream: 'otel'
          Content-Type: application/json
        encoding: json
        tls:
          insecure: true
    processors:
      batch: null
    service:
      pipelines:
        logs:
          receivers:
            - otlp
          processors:
            - batch
          exporters:
            - otlphttp

This configuration assumes that Parseable is running in a container on Docker Desktop, using the default username and password and a log stream called "otel". This routes any OTEL traffic sent to the collector to the Parseable instance and the "otel" log stream.

Finally, wherever you run Dagger, set two environment variables that define the logs endpoint and protocol. These values again assume that the OTEL collector is running in a container on Docker Desktop:

OTEL_EXPORTER_OTLP_LOGS_PROTOCOL="http/json"

OTEL_EXPORTER_OTLP_LOGS_ENDPOINT="host.docker.internal:4318"

In theory, when you run Dagger now, it should send OTEL logs to the collector, which in turn then sends them to Parseable.

But unfortunately, there's a problem.

Parseable only supports receiving JSON data, and Dagger only sends gRPC and protobuf data, so this setup results in an error. There may be a way to convert the data with the collector before sending it on, but for now, that's a dead end.

Summary

As you saw in this post, by using open standards and protocols such as HTTP APIs, programming language SDKs, and OTEL, it's possible to get Dagger and Parseable working together, at least partially. And as Dagger code is, in essence, no different from application code, you can still use the dependencies and methods that exist in that language's ecosystem. For example, you can combine the Bunyan logger with Dagger functions and log their output to Parseable.

Dagger also supports stdout() and stderr() functions from container output. You could send the output from these via a JSON payload to Parseable.
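As a minimal sketch of that idea, the helper below turns a captured output string (for example, the string that awaiting stdout() on a container resolves to) into an array of JSON events, one per non-empty line. The outputToEvents name and the event field names are hypothetical, chosen only for illustration.

```typescript
// Hypothetical helper: turn captured container output into JSON events.
type OutputSource = "stdout" | "stderr";
type OutputEvent = { source: OutputSource; line: number; message: string };

function outputToEvents(output: string, source: OutputSource): OutputEvent[] {
  return output
    .split(/\r?\n/)
    // Number lines before filtering so "line" matches the original output.
    .map((message, index) => ({ source, line: index + 1, message }))
    .filter((event) => event.message.trim() !== "");
}

// The resulting string can be used as the body of a POST to a log stream.
const payload = JSON.stringify(outputToEvents("installing deps\n\nbuild ok\n", "stdout"));
console.log(payload);
```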

In the longer run, Parseable is looking into creating a Dagger module that integrates more directly, but for now, look at it as a combination to experiment with, and if you have any ideas or feedback on how they could integrate better, we'd love to hear from you!