AWS Lambda is a serverless compute service that lets you run code in response to events. However, the debugging, monitoring and audit logs of Lambda functions are tightly coupled to AWS CloudWatch, which is a leading cost driver for enterprises. In this post, we will see how to use Parseable to ingest these logs and store them cost-effectively on AWS S3, for longer retention and more meaningful analytics.

For the uninitiated, AWS Lambda offers virtualized runtime environments to run functions in response to events. This means functions simply run, without you provisioning or managing servers, VMs, or even containers. Lambda functions can be triggered by events such as HTTP requests, file uploads, database updates, and schedules, as well as by events from other AWS services.

Lambda functions are stateless and run in a managed environment. There is no persistent local drive or mount to keep log data; the /tmp directory is only scratch space that disappears along with the execution environment. Hence, Lambda ships logs to AWS CloudWatch by default, and AWS recommends Lambda extensions for shipping them to other, third-party log management systems.

In principle, this is a good idea. Users pay only for compute time consumed on the Lambda functions and log data management is delegated to another system. However, in practice, this leads to shrinking retention, poor visibility into Lambda functions, and high costs.

In this post, we'll look specifically at AWS Lambda, its CloudWatch-based observability and the issues that come with it, and a new approach with Parseable.

Why do logs matter in this context?

Lambda is ephemeral by design. An event triggers a Lambda function, the function does its job and exits, and unless something was logged, no trace of what happened is left behind. This makes the function a black box for developers and SREs: it is hard to understand what happened and whether it was correct.

Serious users need visibility into what happened inside a function invocation:

- What was the input payload? Was it correct?

- Did the Lambda function update the storage / database / cache properly?

- Do we have a record of the responses from external services during the function execution?

- Can we track function invocations from different users?

- and so on.

Now multiply this by the number of Lambda functions and the number of developers, and the problem becomes much more complex. A logging and observability strategy is a must-have.

AWS CloudWatch Logs

AWS anticipated this and offered deep integration between Lambda and CloudWatch. With a simple flick of a switch, you have all the logs in CloudWatch, ready to be analyzed. However, there are a few things to unpack here.

Cost

CloudWatch Logs is priced on demand, like many AWS products. This means costs are tied to the total volume of log data ingested, scanned and stored, which makes monthly costs extremely hard to predict. Even if you manage to model the price, log volume is highly dynamic, and a simple event like a traffic spike can cause a huge spike in costs.

Retention

Shrinking retention is a common way to reduce costs. It helps keep costs under control, but it also leads to missed opportunities: important information that could otherwise improve uptime, customer experience and security is lost.

Multi Cloud Unification

Lambda function logs in isolation are of limited use; ideally you want the larger picture, i.e. logs across your whole infrastructure (AWS or otherwise). In fact, one of the key goals of log observability systems is to unify logs from all sources in your infrastructure and provide a single interface to view and analyze them. AWS would very much prefer that you unify all logs on CloudWatch, but most of the time that is neither feasible nor desirable.

Today, most organizations either already have or are considering a multi-cloud strategy, with a mix of public and private clouds.

Parseable Introduction

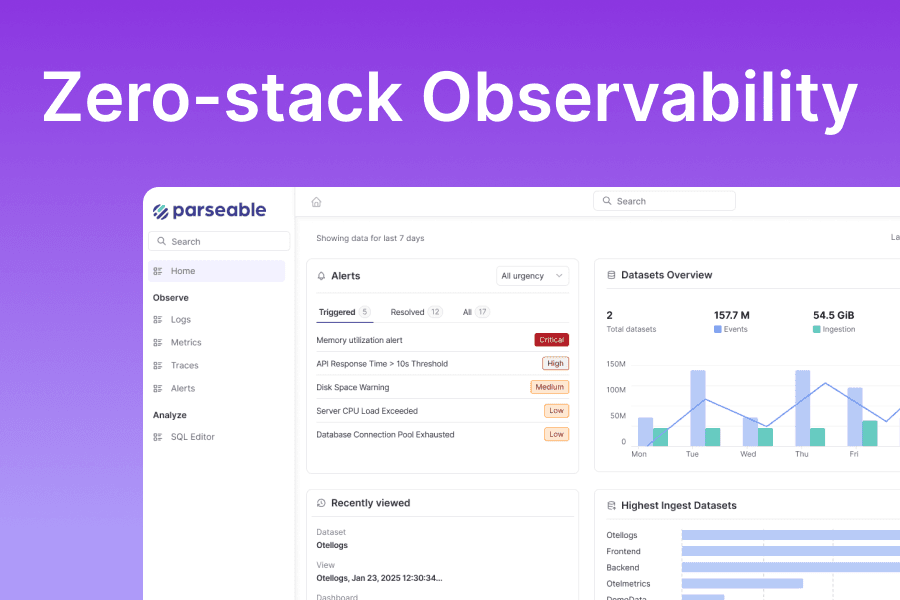

Parseable is an open source log management system that helps you ingest, store and analyze logs from any source. It can be deployed on any cloud, and is designed to be highly scalable, secure and cost effective.

You can ingest logs from a wide variety of sources, including popular logging agents like Vector and Fluent Bit, as well as Kafka and AWS Lambda. You can also ingest logs from any custom application using the Parseable HTTP API.
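As a minimal sketch of that HTTP route, here is a curl call that posts a small batch of JSON events. It assumes the /api/v1/ingest endpoint with basic auth, and that the target stream is named in an X-P-Stream header, as shown in Parseable's documentation at the time of writing; confirm this (and whether the stream must exist before you ingest into it) against the docs for your server version. The host, credentials, stream name and payload fields are hypothetical placeholders, and the request is only sent when APPLY=1 is set.

```shell
#!/bin/sh
# Hypothetical placeholders: replace with your own Parseable details.
PARSEABLE_HOST="parseable.example.com"
PARSEABLE_USERNAME="admin"
PARSEABLE_PASSWORD="admin"
STREAM="myapp"   # assumption: the stream is selected via the X-P-Stream header

INGEST_URL="https://${PARSEABLE_HOST}/api/v1/ingest"

# A JSON array sends several log events in a single request.
PAYLOAD='[{"level":"info","message":"order created","order_id":"1234"}]'

echo "POST ${INGEST_URL} (stream: ${STREAM})"

# Dry run by default so the snippet can be inspected without a live server.
if [ "${APPLY:-0}" = "1" ]; then
  curl --fail --silent --show-error \
    --request POST "$INGEST_URL" \
    --user "${PARSEABLE_USERNAME}:${PARSEABLE_PASSWORD}" \
    --header "X-P-Stream: ${STREAM}" \
    --header "Content-Type: application/json" \
    --data "$PAYLOAD"
fi
```

Any application or script that can make an HTTPS request can push logs the same way, which is what lets Lambda logs sit next to logs from the rest of your infrastructure.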

Log ingestion from AWS Lambda

Since this post is about AWS Lambda, we'll focus on that. Parseable can ingest logs from AWS Lambda using the Parseable Lambda extension. The extension is a small binary that is attached to the Lambda function as a layer and runs alongside the function in the same execution environment. It can be configured to send the function's logs to Parseable.

To use the parseable-lambda-extension with a Lambda function, it must be configured as a layer. You'll also need a Parseable server up and running; refer to the Parseable documentation for more details.

You can add the Parseable extension as a layer with the AWS CLI tool:

$ aws lambda update-function-configuration \
    --function-name MyAwesomeFunction \
    --layers "arn:aws:lambda:<AWS_REGION>:724973952305:layer:parseable-lambda-extension-<ARCH>-<VERSION>:1"

Please remember to change the following values:

AWS_REGION - This must match the region of the Lambda function to which you are adding the extension.

ARCH - x86_64 or arm64.

VERSION - The version of the extension you want to use. The current release is v1.0; write it with a dash instead of a dot, i.e. use the value v1-0.
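The placeholder substitution is easy to get wrong by hand, especially the dot-to-dash rewrite of the version. Here is a small POSIX shell sketch that assembles the layer ARN from the three values; the region and architecture shown are example inputs only, and the account ID and layer naming are copied from the command above.

```shell
#!/bin/sh
# Example inputs (hypothetical): change these to match your function.
AWS_REGION="us-east-1"   # must be the same region as the Lambda function
ARCH="arm64"             # x86_64 or arm64
VERSION="v1.0"           # release version as published

# Reject architectures the extension is not published for.
case "$ARCH" in
  x86_64|arm64) ;;
  *) echo "unsupported ARCH: $ARCH" >&2; exit 1 ;;
esac

# The layer name uses dashes instead of dots: v1.0 -> v1-0.
VERSION_TAG=$(printf '%s' "$VERSION" | tr '.' '-')

LAYER_ARN="arn:aws:lambda:${AWS_REGION}:724973952305:layer:parseable-lambda-extension-${ARCH}-${VERSION_TAG}:1"
echo "$LAYER_ARN"
```

Pass the result as the --layers value. Keep in mind that --layers sets the function's complete list of layers, so also include the ARNs of any layers the function already uses.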

The extension is configured via environment variables set on your Lambda function.

PARSEABLE_LOG_URL - Parseable endpoint URL. It should be set to https://<parseable-url>/api/v1/ingest. Change <parseable-url> to your Parseable instance URL. (required)

PARSEABLE_USERNAME - Username set for your Parseable instance. (required)

PARSEABLE_PASSWORD - Password set for your Parseable instance. (required)

PARSEABLE_LOG_STREAM - Parseable stream name where you want to ingest logs. (optional; defaults to the Lambda function name)
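One way to set these variables is the same update-function-configuration command, this time with --environment. This is a minimal sketch under assumptions: the function name, host, credentials and stream name are hypothetical placeholders, and the script only prints the JSON argument unless APPLY=1 is set. Note that --environment replaces the function's entire set of environment variables, so merge in any variables the function already relies on.

```shell
#!/bin/sh
# Hypothetical placeholder values: replace with your own.
FUNCTION_NAME="MyAwesomeFunction"
PARSEABLE_HOST="parseable.example.com"
PARSEABLE_USERNAME="admin"
PARSEABLE_PASSWORD="change-me"
PARSEABLE_LOG_STREAM="lambda-logs"   # optional; omit to use the function name

# JSON form of the --environment argument. printf does not escape values,
# so keep them free of double quotes and backslashes.
ENV_JSON=$(printf '{"Variables":{"PARSEABLE_LOG_URL":"https://%s/api/v1/ingest","PARSEABLE_USERNAME":"%s","PARSEABLE_PASSWORD":"%s","PARSEABLE_LOG_STREAM":"%s"}}' \
  "$PARSEABLE_HOST" "$PARSEABLE_USERNAME" "$PARSEABLE_PASSWORD" "$PARSEABLE_LOG_STREAM")
echo "$ENV_JSON"

# Dry run by default; set APPLY=1 to actually update the function.
if [ "${APPLY:-0}" = "1" ]; then
  aws lambda update-function-configuration \
    --function-name "$FUNCTION_NAME" \
    --environment "$ENV_JSON"
fi
```

Using the JSON form rather than the CLI shorthand avoids surprises with the "://" and "/" characters in the URL value.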

Conclusion

In this post, we looked at AWS Lambda and its observability challenges. We also looked at Parseable, an open source log management system that can be used to ingest logs from AWS Lambda and store them in a cost effective manner on AWS S3 for longer and more meaningful analytics.

I hope you found this post useful. If you have any questions or feedback, please feel free to join us on the Parseable Slack community or GitHub.